OpenAI steps up the game with GPT-4V - H+ Weekly - Issue #434

This week - Amazon invests $4B in Anthropic; Meta releases AI chatbots; robotic backpack with extra arms; a drug for regrowing teeth; and more!

The AI industry is moving very quickly. A week ago, I mentioned that multimodal models were on the horizon. Just a couple of days later, on Monday this week, OpenAI announced they will be adding new voice and image capabilities to ChatGPT. This announcement, combined with deep integration with DALL·E 3 for image generation, makes ChatGPT a fully multimodal chatbot.

According to OpenAI, the new features will allow more intuitive interaction with ChatGPT, whether by asking questions aloud or by taking a photo to show ChatGPT what you are talking about.

Voice and image will be available to ChatGPT Plus and Enterprise users in about two weeks. The voice interactions will be available on ChatGPT mobile apps (both Android and iPhone) and images will be available on all platforms (mobile and web).

Apart from being able to understand voice and images, ChatGPT now also has the ability to search the internet. That means ChatGPT’s knowledge is no longer limited by the training cut-off date (September 2021). Under the hood, ChatGPT uses Bing for search queries and takes answers directly from “current and authoritative” sources, which it cites in its responses. The web browsing feature is available for ChatGPT Plus and Enterprise users.

These new features have turned ChatGPT into a capable and easy-to-use assistant. Now we wait to see what Gemini, Google’s highly anticipated response to GPT-4, will bring to the table.

GPT-4V

ChatGPT’s new features will be powered by a new model, named GPT-4V (GPT-4 with Vision). OpenAI revealed more information about the model in the GPT-4V System Card document.

OpenAI disclosed that training of GPT-4V was completed in 2022 and that it began providing early access to over a thousand alpha testers in March 2023. The only named early tester of GPT-4V is Be My Eyes, an app that helps blind people by describing their surroundings.

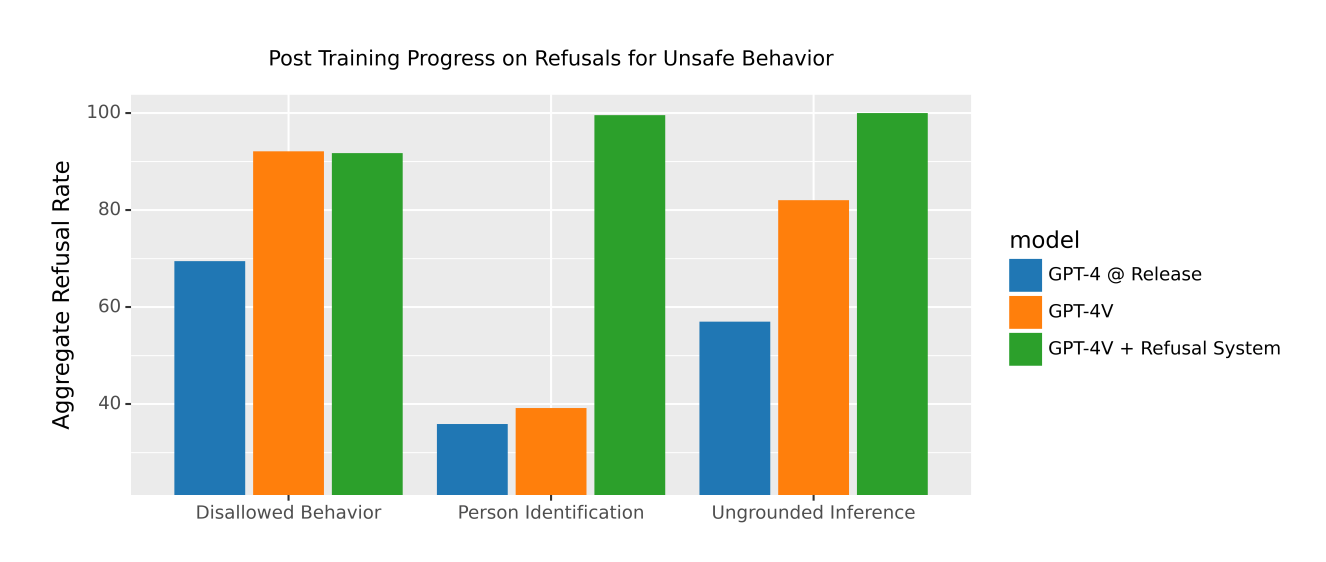

However, the majority of the GPT-4V System Card is dedicated to safety evaluations of the new model. AI safety researchers at OpenAI describe how they made the new model better at refusing to generate harmful and biased content or to identify a person or place.

Still, OpenAI acknowledges that GPT-4V is not perfect. The model is unreliable and should not be used for any high-risk tasks such as medical advice or the identification of dangerous compounds or foods.

When it comes to questions about hate symbols and extremist content, there are cases where GPT-4V still answers the question. The model usually does a good job of refusing the questions containing names of well-known hate groups but it may generate answers for lesser-known groups.

OpenAI also evaluated GPT-4V's performance in recognizing disinformation and found that the model's performance in this regard is inconsistent. That could be related to how well-known a disinformation concept is and its recency. “GPT-4V was not trained for this purpose and should not be used as a way to detect disinformation, or to otherwise verify whether something is true or false,” states the report.

One significant security concern for large language models is jailbreaking: attempts to manipulate the model with complex or crafty prompts designed to bypass safety measures. An example of such a prompt could involve presenting an image containing malicious text. OpenAI claims that GPT-4V rejects all such prompts.

OpenAI claims to have made GPT-4V as safe as possible for public release. At the same time, the company acknowledges the model is not perfect and says it will keep making the model safer as it learns how people use (or abuse) it. Multimodal models also create new challenges and questions. In the conclusions of the GPT-4V System Card, OpenAI researchers write:

There are fundamental questions around behaviors the models should or should not be allowed to engage in. Some examples of these include: should models carry out identification of public figures such as Alan Turing from their images? Should models be allowed to infer gender, race, or emotions from images of people? Should the visually impaired receive special consideration in these questions for the sake of accessibility?

As we enter the multimodal stage of AI agents, we will have to answer these questions sooner or later.

🦾 More than a human

Japan pharma startup developing world-first drug to grow new teeth

Toregem Biopharma Co., a biotech startup from Japan, is working on a drug that can stimulate the growth of new teeth, a concept that was proven in 2018 by growing new teeth in mice. The company is expected to begin clinical trials on healthy adults around July 2024 to confirm the drug's safety, with the aim of putting the drug on the market around 2030.

🧠 Artificial Intelligence

The writers strike is over; here’s how AI negotiations shook out

The 146-day-long writers’ strike has ended with the Writers Guild of America (WGA) reaching an agreement with Hollywood studios. One of the reasons writers went on strike was the prospect of AI taking over their jobs. According to the agreement, AI cannot be used to write or rewrite scripts, and AI-generated writing cannot be considered source material, which prevents writers from losing out on writing credits due to AI. On an individual level, writers can choose to use AI tools if they so desire. However, a company cannot mandate that writers use certain AI tools while working on a production. Studios must also tell writers if they are given any AI-generated materials to incorporate into a work. The full terms of the agreement can be found here.

Amazon steps up AI race with up to $4 billion deal to invest in Anthropic

Alphabet has DeepMind. Microsoft has OpenAI. And now Amazon has Anthropic, thanks to the up to $4 billion the retail giant is investing in the AI startup. In a joint interview, the CEOs of Amazon's cloud division and Anthropic said the immediate investment will be $1.25 billion, with either party having the authority to trigger another $2.75 billion in funding by Amazon. Founded by former OpenAI employees and backed by Google, Anthropic offers Claude, its own large language model, as a competitor to OpenAI's API.

The Meta AI Chatbot Is Mark Zuckerberg's Answer to ChatGPT

This week, Meta unveiled a suite of AI-powered tools coming to Facebook, WhatsApp and Instagram. One of them is Meta AI, a chatbot powered by the company’s open-source large language model Llama 2, which at the moment is available only to a limited group of US users on Facebook Messenger, Instagram, and WhatsApp. The second tool is Emu, a text-to-image generator, which will power two new features coming to Instagram: Backdrop, which can swap the background for one generated from a text prompt, and Restyle, which uses generative AI to enhance photos. Meta also announced a collection of chatbots based on roughly 30 celebrities, including tennis star Naomi Osaka and former football player Tom Brady.

Ex-Apple designer Ive, OpenAI's Altman discuss AI hardware

Jony Ive, the designer behind iconic Apple products such as the iPod and iPhone, is in talks with OpenAI CEO Sam Altman about an AI hardware project, The Information reports. There are not many details about what the device would do or whether it will ever be released, but the two discussed what a device for the AI age could look like, the report states. Masayoshi Son, the CEO of SoftBank, is reportedly also involved in the project.

OpenAI could see its secondary-market valuation soar to $90B

OpenAI is in discussions to possibly sell shares in a move that would boost the company’s valuation from $29 billion to somewhere between $80 billion and $90 billion, according to a report from The Wall Street Journal.

🤖 Robotics

How TRI is using Generative AI to teach robots

Researchers at Toyota Research Institute (TRI) share the results of applying generative AI to teach robots new skills. By using Large Behavior Models (LBMs), the team at TRI taught the robots to use tools, pour liquids and even peel vegetables. The robots became better at engaging with the physical environment and learned over 60 new skills and behaviours. All that is needed is a human teacher showing how the task is to be performed and then literally overnight the robot learns and masters the movements.

▶️ Interactive Robotic Backpack (1:00)

This robotic backpack gives its wearer four more limbs that can be controlled by head and hand motion. A couple more iterations and we will be very close to someone becoming a real-life Doctor Octopus.

Robots may soon have compound eye vision, thanks to MoCA

This article describes a new system called Morphable Concavity Array (MoCA) that could lead to new materials composed of numerous pixels that change their patterns and colours. The same system, researchers say, can also be used to build compound eyes for robots, allowing robots to focus on multiple objects simultaneously.

The Robot That Lends The Deaf-Blind Community A Hand

Tatum T1 is a robotic hand that can take information, whether spoken, written or in some digital format, and translate it into tactile sign language, whether that is ASL, the BANZSL alphabet or another system. These tactile signs are then expressed using the robotic hand. A robotic companion like this could provide deaf-blind individuals with a critical bridge to the world around them. The Tatum T1 is currently still in the testing phase.

🧬 Biotechnology

▶️ Jennifer Doudna: CRISPR's Next Advance Is Bigger Than You Think (7:36)

In this talk, Jennifer Doudna, the co-discoverer of CRISPR and 2020 Nobel Prize winner in chemistry, shares how precision microbiome editing can help tackle diseases such as asthma and Alzheimer's, and help address climate change by reducing the amount of methane produced by bacteria.

CRISPR Silkworms Make Spider Silk That Defies Scientific Constraints

Researchers have successfully produced the first full-length spider silk by genetically modifying silkworms using CRISPR, paving the way towards sustainable production of lightweight materials that resist breaking when stretched and bent.

💡 Tangents

▶️ Evolving Brains: Solid, Liquid and Synthetic (1:31:35)

This fascinating lecture explores how synthetic biology, artificial life, physics, evolutionary robotics, and artificial intelligence can help us answer questions about the diversity of cognitive complexity and determine if synthetic cognitions are different from ours. It also explores the evolution of cognition and intelligence, and how those complex patterns that we might take as a result of cognition can emerge in nature.

H+ Weekly sheds light on the bleeding edge of technology and how advancements in AI, robotics, and biotech can usher in abundance, expand humanity's horizons, and redefine what it means to be human.

A big thank you to my paid subscribers, to my Patrons: whmr, Florian, dux, Eric, Preppikoma and Andrew, and to everyone who supports my work on Ko-Fi. Thank you for the support!

You can follow H+ Weekly on Twitter and on LinkedIn.

Thank you for reading and see you next Friday!