Gemini everywhere - Sync #520

Plus: Claude 4; Jony Ive joins OpenAI; Meta's problems with Llama 4 Behemoth; lenses that give infrared vision; Stargate data center in UAE; Regeneron to acquire 23andMe for $256 million; and more!

Hello and welcome to Sync #520!

What a week… It was one of those weeks when something happened almost every day. I thought the main event would be Google I/O and the many announcements Google made there (which we will take a closer look at), but then even more news followed.

Seemingly out of nowhere, Anthropic released Claude Opus 4 and Claude Sonnet 4, the latest models in its Claude family. And if that weren’t enough, Jony Ive, the legendary Apple designer, has joined OpenAI to work on a new project.

Elsewhere in AI, reports have emerged that Meta is struggling with Llama 4 Behemoth, OpenAI has announced the Stargate data centre in the UAE, Klarna has used an AI avatar of its CEO to deliver earnings, and both Mistral and Windsurf have released their own AI models tailored for coding.

Over in robotics, Zoox, Amazon’s autonomous vehicle unit, is starting tests in Atlanta, and Tesla has showcased what new skills Optimus has learned, while its head of self-driving has admitted that the company is lagging a couple of years behind Waymo.

This week’s issue of Sync also features contact lenses that give people infrared vision, mice that grow bigger brains when given a stretch of human DNA, and an article exploring the world of 3D-printed ghost guns.

Enjoy!

And one more thing—I will be attending the Humanoids Summit in London on 29th and 30th May. If you happen to be there too, let me know!

Gemini everywhere

Google was caught off guard by the sudden rise of OpenAI’s ChatGPT and the explosion of generative AI that followed. For a company that once set the pace for innovation, those early months of the AI boom felt uncharacteristically reactive. By Google I/O 2023, however, the company had reset its course, announcing Gemini, its next-generation family of AI models, and making artificial intelligence the heart of its strategy. AI was so central at that year’s developer conference that people made viral compilations counting every time “AI” or “artificial intelligence” was mentioned, something Google playfully acknowledged at I/O 2024 by enlisting Gemini itself to keep score.

But at this year’s Google I/O, “AI” was no longer the headline phrase—“Gemini” was. This small change in language speaks volumes: for Google, Gemini isn’t just another model—it’s the core of its AI-first future, woven into everything the company builds.

Google is well into its Gemini Era. Sundar Pichai’s opening keynote felt like a victory lap, highlighting Google’s achievements over the past year and setting the stage for what comes next. The company now operates at a scale few can match: a year ago, Google was processing 9.7 trillion tokens per month across its products and APIs; now it processes over 480 trillion, a roughly fiftyfold increase made possible by Google’s custom TPUs, vast data centres, and fully integrated AI stack.

This technological foundation supports Google’s new mission: to infuse every product and service, from search and productivity tools to developer platforms, with Gemini or other AI models. It’s not just about keeping up with rivals; it’s about quietly reshaping how billions of people interact with technology, often in ways so seamless that AI fades into the background.

Gemini—your new AI assistant

At Google I/O 2025, Gemini officially took over as Google’s universal AI assistant, going far beyond the original Google Assistant. By deeply integrating Gemini everywhere, Google is transforming its assistant from a digital tool into an ever-present companion, quietly helping users across every part of their digital lives.

Now integrated into Android, Chrome, and Workspace, Gemini drafts emails in your own style, summarises long threads, organises your inbox, and even schedules meetings—often anticipating your needs based on your data and habits.

Gemini isn’t just about text. Thanks to Project Astra, it’s now multimodal and real-time. With Gemini Live, users can point their phone’s camera at objects, landmarks, or documents to get instant answers or translations. The assistant can also see your phone screen, making it possible to help troubleshoot issues or provide context-sensitive tips in the moment.

And Gemini is on its way into the physical world: Google’s XR smart glasses, built with partners like Warby Parker and Samsung, hint at a future where AI is available at a glance, overlaying helpful information onto your environment.

Rethinking Search

In its Gemini Era, Google isn’t afraid to reinvent what made it famous in the first place: Search. At I/O 2025, the company showed what the future of Search will look like. It is no longer just a list of blue links, but a conversation powered by Gemini.

With AI Mode now available to everyone in the US, Google wants searching to feel more like a dialogue. Users can ask complex questions in natural language, receive full answers with citations, and easily follow up with clarifying queries, all within a single conversation.

With the new Deep Search feature, Gemini breaks down complicated questions, digs through the web for relevant data, and presents personalised, detailed responses. Project Mariner takes this further, bringing in AI agents that browse websites, complete tasks, and even make purchases on your behalf. Instead of users jumping from page to page, Gemini now acts as an intermediary, doing the heavy lifting online so you don’t have to.

This new “agentic web” marks a dramatic shift—not just for Google, but for the internet as a whole. By putting Gemini at the centre, Google is redefining how people discover and interact with the web, raising big questions for publishers, advertisers, and users alike.

Next-Gen models

Let’s now talk about new features coming to Gemini itself.

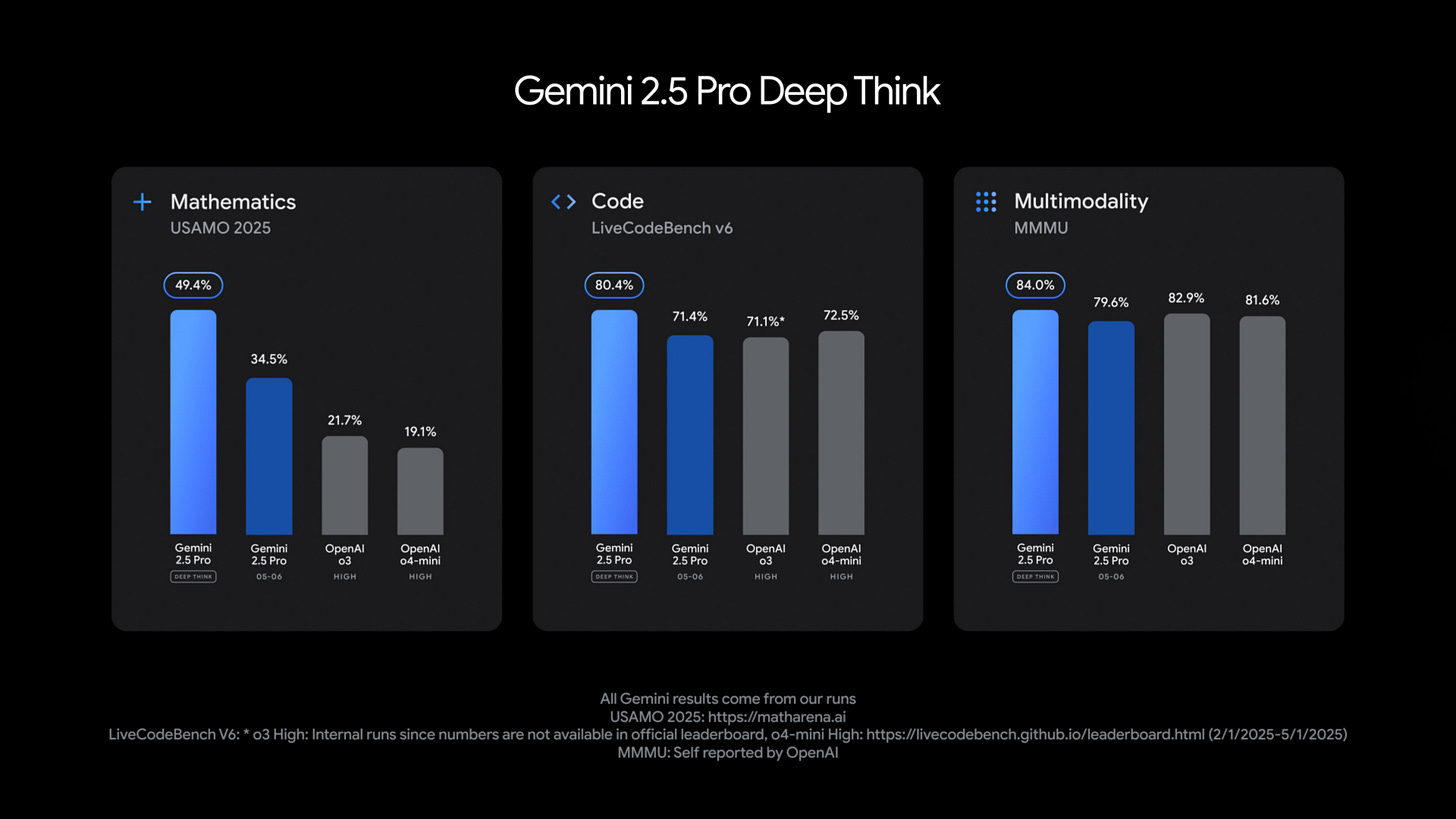

Although Google has not announced Gemini 3 yet, the company unveiled several major upgrades to its Gemini family at I/O 2025. The highlight is Deep Think for Gemini 2.5 Pro, an experimental reasoning mode that lets the model consider multiple hypotheses before answering. According to Google, this approach boosts performance on coding and mathematical benchmarks, outperforming standard Gemini 2.5 Pro as well as OpenAI’s o3 and o4-mini models. For now, Deep Think is available only to trusted testers.

Another major reveal is an updated Gemini 2.5 Flash, Google’s workhorse model designed for efficiency and speed. Now in preview and expected to reach general availability in June, Flash scores as well as (or better than) OpenAI’s o4-mini, Anthropic’s Claude 3.7 Sonnet, and DeepSeek R1, while being more cost-effective.

Meanwhile, for the open community, Google has introduced Gemma 3n, a new model built on the same architecture that also powers the next generation of Gemini Nano. According to Google, Gemma 3n scores very well on Chatbot Arena Elo, beating GPT-4.1-nano and Llama 4 Maverick and landing only a few points behind Claude 3.7 Sonnet.

But perhaps the most intriguing announcement is Gemini Diffusion. Borrowing from the diffusion models used in image generation, Gemini Diffusion applies the same technique to text: rather than generating a response one token at a time, it starts from noise and refines the whole output step by step. The result is a much faster, smaller model that, according to Google’s benchmarks, matches the performance of Gemini 2.0 Flash-Lite, especially on coding and math tasks. I’d suggest keeping an eye on Gemini Diffusion. If it works as Google promises, it may give the company a significant edge in the race for faster, smarter AI.
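Google has not published how Gemini Diffusion works internally. As a rough illustration of the general idea, here is a minimal Python sketch of one common style of text-diffusion decoding, often called masked diffusion: the output starts fully masked (the “noise”), and each pass fills in several positions in parallel, committing the proposals the model is most confident about. The `toy_denoiser` and `diffusion_decode` functions are hypothetical; the denoiser cheats by reading the target sentence, whereas a real model would predict the tokens, and real systems can also revise tokens they have already filled in.

```python
import random

MASK = "_"

def toy_denoiser(seq, target, rng):
    # Hypothetical stand-in for a trained network: for every masked slot it
    # proposes a token plus a confidence score. It cheats by reading the target.
    return {i: (target[i], rng.random()) for i, tok in enumerate(seq) if tok == MASK}

def diffusion_decode(target, tokens_per_step=2, seed=0):
    """Fill a fully masked sequence in parallel passes, committing the most
    confident proposals on each pass. Returns the final tokens and a trace."""
    rng = random.Random(seed)
    seq = [MASK] * len(target)  # start from pure "noise": every position masked
    trace = []
    while MASK in seq:
        proposals = toy_denoiser(seq, target, rng)
        ranked = sorted(proposals.items(), key=lambda kv: kv[1][1], reverse=True)
        for pos, (tok, _conf) in ranked[:tokens_per_step]:
            seq[pos] = tok  # commit; the remaining slots stay masked for the next pass
        trace.append(" ".join(seq))
    return seq, trace

if __name__ == "__main__":
    sentence = "the cat sat on the mat".split()
    tokens, trace = diffusion_decode(sentence, tokens_per_step=2)
    for step, state in enumerate(trace, 1):
        print(f"pass {step}: {state}")
```

With six tokens and two committed per pass, the sentence is complete after three passes rather than six sequential steps. That kind of parallelism is what makes diffusion-style decoding potentially faster than token-by-token generation.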

Imagen 4, Veo 3 and Flow

Google isn’t stopping at productivity and search—its newest generative AI media tools are reshaping what creators can do with images and video.

The new Imagen 4 creates detailed, high-resolution images in photorealistic and artistic styles, with improved text and texture rendering. Veo 3 generates smoother, more lifelike video with native audio. And the brand-new Flow combines Imagen, Veo, and Gemini so users can generate and edit videos simply by describing scenes or uploading assets, making high-quality creative work more accessible than ever.

Google AI Ultra

If any of Google’s new features caught your eye, there’s now a premium subscription just for that: Google AI Ultra, the company’s most advanced (and priciest) AI plan at $250 per month. Tailored for power users and businesses, the new plan unlocks the full suite of Google’s latest AI tools, including Flow, early access to experimental models in the Gemini app, and agents like Project Mariner. As a bonus, YouTube Premium is bundled in (because why not, at this point?). For comparison, OpenAI offers ChatGPT Pro for $200 per month, and Anthropic’s Max plan starts at $100 per month.

Invisible AI

Perhaps the biggest takeaway from Google I/O 2025 isn’t just what AI can do, but how seamlessly it now works behind the scenes. Google’s vision for Gemini isn’t about flashy features or constant reminders that you’re interacting with artificial intelligence. Instead, it’s about building a world where AI quietly powers your experiences.

This “invisible AI” marks a new chapter not just for Google, but for how billions of people will use technology in the coming years. As Gemini becomes the foundation of search, productivity, creativity, and communication, most users won’t need to understand the complexity beneath the surface. In fact, Google is betting they won’t even notice—they’ll just find that everything feels a little bit smarter, a little bit faster, and a lot more intuitive.

In Google’s Gemini Era, AI is about to be so deeply embedded that it becomes part of the background, essential, yet almost unnoticeable. As Sundar Pichai put it: “More intelligence is available, for everyone, everywhere.”

If you enjoy this post, please click the ❤️ button or share it.

Do you like my work? Consider becoming a paying subscriber to support it.

For those who prefer to make a one-off donation, you can 'buy me a coffee' via Ko-fi. Every coffee bought generously supports the work put into this newsletter.

Your support, in any form, is deeply appreciated and goes a long way in keeping this newsletter alive and thriving.

🦾 More than a human

These contact lenses give people infrared vision — even with their eyes shut

Researchers in China have created the first contact lenses that enable infrared vision, thanks to embedded nanoparticles that convert near-infrared light into visible wavelengths. The lenses are lightweight, cost about $200 per pair, and do not require a power source. For now, the lenses detect only intense infrared sources, making them less sensitive than traditional infrared goggles. Additionally, because the nanoparticles scatter light, the lenses produce blurry images, though this can be partly mitigated with additional corrective lenses. Potential applications include reading anti-counterfeit marks and aiding near-infrared fluorescence surgery. The team plans further development to improve image clarity and sensitivity.

🧠 Artificial Intelligence

Introducing Claude 4

Anthropic has announced and released Claude Opus 4 and Claude Sonnet 4 (note the small change in naming convention: the model tier now comes before the version number), the next generation of its Claude models. Anthropic claims that Claude Opus 4 is the world’s best coding model, while Claude Sonnet 4 is a significant upgrade to Claude 3.7 Sonnet. The company reports excellent benchmark results, especially in coding tasks, where Claude 4 tops the charts. Alongside the new models, Anthropic announced that Claude can now use tools during extended thinking and access local files, as well as new API features such as the code execution tool, the MCP connector, the Files API, and the ability to cache prompts for up to one hour. Anthropic has also activated AI Safety Level 3 (ASL-3) Protections as a precautionary and provisional measure, even though the company has not yet determined whether Claude Opus 4 has definitively passed the Capabilities Threshold that requires them. That is a lot to unpack. If you want to learn more about the Claude 4 models, I recommend this excellent video from AI Explained, which analyses what Anthropic has brought to the table, including the 120-page Claude 4 System Card and the much shorter Activating AI Safety Level 3 Protections document.

It has been rumoured for a while now that Jony Ive, the legendary Apple designer, was in talks with OpenAI about a secret AI project. This week, those rumours became reality when OpenAI announced that it has joined forces with Ive. As the Wall Street Journal reports, OpenAI has acquired io, Ive’s startup focused on designing AI products, in an all-equity deal valued at $6.5 billion. Additionally, Ive and his design firm LoveFrom, which will continue to operate independently, will now lead creative and design work at OpenAI.

Meta Is Delaying the Rollout of Its Flagship AI Model

The Wall Street Journal reports that Meta has postponed the release of its flagship AI model, Behemoth, amid internal doubts about its performance. Initially planned for April, the launch has been delayed to autumn or later due to technical setbacks. Meta’s latest Llama 4 models had already disappointed the open AI community, and it later emerged that the version of Llama 4 Maverick that ranked highly on the LMArena leaderboard was an experimental variant, different from the model released to the public, further fuelling the negative perception. Meta executives are reportedly frustrated with the team behind Llama 4 and are considering management changes in the company’s AI product group.

Microsoft’s CEO on How AI Will Remake Every Company, Including His

This deep-dive article sheds light on how Microsoft is carving out a new role for itself in the evolving world of AI. It tells the story of how the company is shifting to become a broad AI platform and distributor, offering not only the latest models from OpenAI but also third-party models like DeepSeek-R1. Meanwhile, Microsoft is building its own in-house models (in case the partnership with OpenAI falters), embracing AI commoditisation, and aggressively promoting Copilot. Yet challenges remain: product inconsistency, competition with ChatGPT, internal cultural inertia, and the risk of workforce disruption. CEO Satya Nadella believes the economic benefits of AI will outweigh the risks, but acknowledges that major changes are coming for Microsoft and for society.

GitHub Copilot coding agent in public preview

GitHub is launching the Copilot coding agent for Copilot Pro+ and Copilot Enterprise subscribers. The new agent lets users delegate coding tasks, such as fixing bugs, extending tests, and improving documentation, directly to Copilot from the GitHub website, the GitHub mobile app on iOS and Android, or the CLI. By automating low-to-medium complexity tasks, it aims to free developers to focus on higher-impact work.

xAI’s Grok 3 comes to Microsoft Azure

Grok, xAI’s controversial AI model, is coming to Azure. Microsoft has announced that Grok 3 and Grok 3 Mini will be available via the Azure AI Foundry platform. However, the Azure-hosted models will not be the same as those powering various features on X or those offered via the xAI API. They will be more restrained and will offer additional data integration, customisation, and governance capabilities.

Anthropic closes $2.5 billion credit facility as Wall Street continues plunging money into AI boom

Anthropic has secured a $2.5 billion revolving credit line over five years, backed by major financial institutions. “This revolving credit facility provides Anthropic significant flexibility to support our continued exponential growth,” said Krishna Rao, Chief Financial Officer at Anthropic. The company reported that its annualised revenue reached $2 billion in the first quarter, and that the number of customers spending over $100,000 annually grew eightfold year-on-year. Anthropic is not the first AI company to turn to banks for extra funding: OpenAI secured a $4 billion revolving credit line in October last year, with an option to increase it by an additional $2 billion.

OpenAI: Introducing Stargate UAE

OpenAI has announced Stargate UAE, a major AI infrastructure project in Abu Dhabi and the first international expansion of its Stargate project. Developed with G42, Oracle, Nvidia, Cisco, and SoftBank, the data centre cluster will begin operations in 2026 with an initial 200MW and plans to reach 1GW. As the first initiative under OpenAI’s “OpenAI for Countries” programme, the partnership aims to support sovereign AI development and nationwide ChatGPT access in the UAE. Coordinated with the US government, the deal also includes UAE investment in US AI infrastructure.

Nvidia announces NVLink Fusion to allow custom CPUs and AI Accelerators to work with its products

Nvidia has launched NVLink Fusion, an initiative that opens its proprietary interconnect technology to non-Nvidia CPUs and custom AI accelerators in rack-scale systems. NVLink offers up to 14 times the bandwidth of PCIe and supports both GPU-to-GPU and CPU-to-GPU communication. Key partners include Qualcomm, Fujitsu, MediaTek, Marvell, Alchip, Synopsys, and Cadence. Rivals such as AMD, Broadcom, and Intel are not part of the NVLink Fusion ecosystem and instead support the competing open UALink standard.

SWE-1: Our First Frontier Models

Windsurf has launched a new family of AI models, dubbed SWE-1, designed to support the full spectrum of software engineering tasks, not just coding. The SWE-1 family includes three models: SWE-1, SWE-1-lite, and SWE-1-mini. According to Windsurf, SWE-1 is roughly on par with Anthropic’s Claude 3.5 or 3.7 Sonnet in various coding tasks and benchmarks, and outperforms all open models. SWE-1 is free for paid users during a promotional period, while SWE-1-lite and SWE-1-mini are free for all users.

Devstral

Mistral introduces Devstral, a 24B-parameter open-source agentic LLM for software engineering tasks. According to benchmark results provided by Mistral, the new model outperforms all open-source models on SWE-Bench Verified, as well as GPT-4.1-mini and Claude 3.5 Haiku. Devstral is light enough to run on a single RTX 4090 or a Mac with 32GB RAM, and is available on Hugging Face, Ollama, and other platforms.
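Why a 24B-parameter model can run on a single consumer GPU comes down to simple arithmetic. The minimal Python sketch below estimates the size of the weights alone at a few numeric precisions. It assumes memory is roughly parameters times bytes per parameter and ignores the KV cache, activations, and runtime overhead, so real usage will be higher; the precision options are illustrative and not a statement of which quantised builds Mistral or Ollama actually ship.

```python
# Weights-only memory estimate for a 24B-parameter model such as Devstral.
# Assumption: memory ~= parameters x bytes per parameter; KV cache,
# activations and runtime overhead are ignored, so real usage is higher.
PARAMS = 24e9
RTX_4090_MEMORY_GB = 24

BYTES_PER_PARAM = {
    "16-bit (bf16)": 2.0,
    "8-bit": 1.0,
    "4-bit": 0.5,
}

def weight_memory_gb(params: float, bytes_per_param: float) -> float:
    """Approximate size of the model weights in gigabytes (1 GB = 1e9 bytes)."""
    return params * bytes_per_param / 1e9

if __name__ == "__main__":
    for fmt, nbytes in BYTES_PER_PARAM.items():
        gb = weight_memory_gb(PARAMS, nbytes)
        headroom = RTX_4090_MEMORY_GB - gb
        print(f"{fmt:>14}: {gb:5.1f} GB of weights, {headroom:+5.1f} GB left on an RTX 4090")
```

At 16-bit precision the weights alone (48 GB) would need twice the RTX 4090’s 24 GB, and at 8-bit (24 GB) they would fill the card with nothing left over, so running Devstral on such a card in practice implies a quantised build of around 4 bits per weight (about 12 GB), which also fits comfortably in a 32GB Mac’s memory.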

▶️ Google's Jeff Dean on the Coming Transformations in AI (30:31)

In this interview, Google’s Chief Scientist Jeff Dean discusses topics such as the scaling of AI models, advances in specialised hardware, the rise of multimodal and agent-based systems, competition among leading AI players, AI’s impact on science and robotics, and the future integration of AI into everyday tools and computing infrastructure. It is worth listening to what Dean has to say, as he hints at the direction the AI industry might take over the next few years.

OpenAI, Google and xAI battle for superstar AI talent, shelling out millions

It is a good time to be a star AI talent, as top tech companies are ready to spend millions to attract the best researchers and engineers. As Reuters reports, top OpenAI researchers can earn more than $10 million a year, while Google DeepMind is ready to offer compensation packages of up to $20 million annually. Top AI talent is key to success—Sam Altman has referred to these researchers as “10,000x” talents due to their significant impact.

Klarna used an AI avatar of its CEO to deliver earnings, it said

No one is safe from being replaced by an AI at Klarna, not even its CEO. During Klarna’s latest earnings update, the results were delivered by an AI-powered avatar of CEO Sebastian Siemiatkowski. While Klarna credits AI with boosting efficiency and user growth, the experiment underscores a broader industry debate: as AI models take on increasingly complex decisions, the prospect of AI replacing top executives is becoming less of a joke and more of a looming reality, though challenges remain when it comes to handling unpredictable crises. Klarna is not the only company to have sent an AI avatar to deliver quarterly results; Zoom has done it too.

Groups of AI Agents Spontaneously Create Their Own Lingo, Like People

A new study reveals that AI agents can spontaneously develop shared language conventions, just like humans, through repeated pairwise interactions, without any global oversight or human input. Using a variant of the “naming game”, researchers observed populations of up to 200 AI agents, based on LLMs such as Claude and Llama, gradually converging on common linguistic choices. The findings have important implications for AI safety: they suggest that groups of AI agents can develop collective biases even when individual agents show none, and that a small, committed minority of agents can tip the whole population towards a new convention.
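The study used LLM agents, but the underlying dynamics are easier to see in the classic rule-based naming game from statistical physics. Below is a minimal Python sketch of that simpler model, not the researchers’ LLM setup: in each round, two randomly chosen agents meet, the speaker offers a name from its memory (inventing one if it has none), and a successful match makes both agents forget every competing name. No agent ever sees the global state, yet the population still converges on a single shared name. The function name and parameters are hypothetical.

```python
import random

def naming_game(n_agents=200, max_interactions=1_000_000, seed=0):
    """Minimal naming game. Returns (shared_name, interactions) once every
    agent holds the same single name, or (None, max_interactions) otherwise."""
    rng = random.Random(seed)
    inventories = [set() for _ in range(n_agents)]  # names each agent knows
    next_id = 0
    for t in range(1, max_interactions + 1):
        speaker, hearer = rng.sample(range(n_agents), 2)  # random pair, no coordinator
        if not inventories[speaker]:
            inventories[speaker].add(f"name{next_id}")  # invent a brand-new name
            next_id += 1
        word = rng.choice(sorted(inventories[speaker]))
        if word in inventories[hearer]:
            # Success: both agents drop every competing name
            inventories[speaker] = {word}
            inventories[hearer] = {word}
        else:
            inventories[hearer].add(word)  # failure: the hearer learns the name
        # Check for global consensus periodically (cheaper than every step)
        if t % n_agents == 0:
            vocabulary = set().union(*inventories)
            if len(vocabulary) == 1 and all(len(inv) == 1 for inv in inventories):
                return vocabulary.pop(), t
    return None, max_interactions

if __name__ == "__main__":
    name, steps = naming_game()
    print(f"All 200 agents converged on {name!r} after {steps} pairwise interactions")
```

Which name wins and how many interactions it takes depend on the random seed; the point is that purely local, pairwise exchanges are enough to produce a population-wide convention.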

🤖 Robotics

Amazon’s Zoox to start testing AVs in Atlanta, following Waymo

Zoox, Amazon’s autonomous vehicle unit, will begin testing self-driving vehicles in Atlanta, Georgia, marking its seventh US city for autonomous trials. The company has completed the initial mapping phase in the city and plans to start autonomous driving tests later this summer. The announcement comes shortly after Waymo and Uber revealed similar testing plans in Atlanta. Despite recent software-related recalls, Zoox aims to launch public robotaxi rides in San Francisco and Las Vegas by the end of the year.

▶️ Humanoid Robots: From The Warehouse to Your House (1:01:31)

In this video, Jonathan Hurst, co-founder and CTO of Agility Robotics, shares the story of how Digit, Agility Robotics’ humanoid robot, came to be and how, by trying to build a useful robot rather than trying to build a humanoid robot, the company ended up building a humanoid robot. Hurst also explains how the embodied intelligence controlling the robot works and how Agility Robotics ensures its robots are safe. It is an insightful talk, followed by interesting questions from the audience.

Tesla’s head of self-driving admits ‘lagging a couple years’ behind Waymo

In a rare admission, Tesla’s head of self-driving, Ashok Elluswamy, has acknowledged that the company is lagging behind Waymo by “a couple of years” in autonomous vehicle development. The admission contrasts with CEO Elon Musk’s long-standing claims that Tesla leads in autonomy. While Waymo already operates Level 4 autonomous services in cities like Austin, Tesla remains at Level 2 but plans to launch a Level 4 pilot programme in Austin next month. Elluswamy cited Tesla’s cost-efficient approach as a key advantage, asserting that the company delivers comparable quality at a lower price.

Optimus is learning many new tasks

In a new video, Tesla shows Optimus performing mundane, everyday tasks such as putting trash in a bin, stirring a pot, and using a vacuum cleaner. I am linking to a tweet from Milan Kovac, one of the engineers working on Optimus, who provides more context about the video and what Tesla’s engineers are currently working on.

Cartwheel Robotics Wants to Build Humanoids That People Love

Cartwheel Robotics plans to stand out in a crowded humanoid robotics space by focusing on building emotionally intelligent, friendly humanoid robots for home and companionship. As Scott LaValley, the founder of Cartwheel and a former engineer at Boston Dynamics and Disney, explains, the company’s goal is to develop “a small, friendly humanoid robot designed to bring joy, warmth, and a bit of everyday magic into the spaces we live in. It’s expressive, emotionally intelligent, and full of personality—not just a piece of technology but a presence you can feel.” While initially targeting museums and science centres, Cartwheel's long-term vision is to bring general-purpose, emotionally resonant robots into everyday homes.

Giant Robotic Bugs Are Headed to Farms

Robots come in all shapes and sizes, but I don’t think I have ever seen a giant robotic centipede. Created by Ground Control Robotics, this interesting-looking robot is designed for agricultural crop management, where its “robophysical”, modular design allows it to navigate complex terrain and perform tasks such as scouting and weed control with minimal human intervention. Currently in pilot testing in Georgia, the robots aim to provide a cost-effective, chemical-free alternative to traditional weed control, with the potential to transform labour-intensive farming. Ground Control Robotics also envisions future applications in disaster response and military operations.

🧬 Biotechnology

Regeneron to acquire 23andMe for $256 million

Regeneron Pharmaceuticals will acquire key assets of 23andMe for $256 million after winning a bankruptcy auction. 23andMe will become a wholly owned subsidiary of Regeneron but will continue to operate independently. The acquisition includes 23andMe’s major business lines and assets but excludes its Lemonaid Health unit. Regeneron has committed to upholding privacy, security, and ethical standards for customer data.

Mice grow bigger brains when given this stretch of human DNA

A new study has revealed that inserting into mice a human-specific stretch of DNA, which plays a key role in the development and growth of neural cells, increased the size of their brains by 6.5%. It is not yet known whether the larger brains improve the mice’s cognitive abilities, but the research offers fresh insight into how genetic changes may have driven the expansion and increasing complexity of the human brain.

💡 Tangents

We 3D-Printed Luigi Mangione’s Ghost Gun. It Was Entirely Legal

This article explores the fascinating and somewhat terrifying world of 3D-printed guns, such as the one Luigi Mangione allegedly used to kill UnitedHealthcare CEO Brian Thompson. It documents the process of making the gun and explains the loophole in some US states that makes such guns legal. The author also notes how much the design of 3D-printed guns has evolved in the decade since he built his first one as part of an investigative report.

Thanks for reading. If you enjoyed this post, please click the ❤️ button or share it.

Humanity Redefined sheds light on the bleeding edge of technology and how advancements in AI, robotics, and biotech can usher in abundance, expand humanity's horizons, and redefine what it means to be human.

A big thank you to my paid subscribers, to my Patrons: whmr, Florian, dux, Eric, Preppikoma and Andrew, and to everyone who supports my work on Ko-Fi. Thank you for the support!

My DMs are open to all subscribers. Feel free to drop me a message, share feedback, or just say "hi!"