New AI Models That Aren’t GPT-5 - Sync #531

Plus: OpenAI eyes $500B valuation; Montana becomes a hub for biohacking; OpenAI, Google and Anthropic win US approval for civilian AI contracts; new robot-dog from Unitree; and much more!

Hello and welcome to Sync #531!

This week, OpenAI has finally released the long-anticipated GPT-5. It will take some time to unpack and put OpenAI’s announcements into the right perspective, and I’ll have a separate article analysing GPT-5 early next week. In the meantime, I’d like to highlight other notable models released this week, from gpt-oss to Genie 3 and new image models.

Elsewhere in AI, OpenAI is reportedly in talks for a share sale that would value the company at $500 billion. Additionally, OpenAI, together with Google and Anthropic, has won US approval for civilian AI contracts. In other news, Tesla is disbanding its Dojo team, Vogue’s use of an AI-generated fashion model has sparked controversy, and top AI models have faced off in a chess tournament.

In robotics, Unitree has unveiled a new robot dog, Marques Brownlee has shared his experiences riding in both a Tesla Robotaxi and a Waymo, and we explore how swarm robotics could transform manufacturing.

This week’s issue of Sync also features the story of a former Royal Marine who spent decades working with artificial limbs and, after an accident, became a patient himself. We also examine the science of regrowing human teeth and how Montana is becoming a hub for biohackers, and meet the Claude fans who threw a funeral party for a retired AI model.

Enjoy!

New AI Models That Aren’t GPT-5

GPT-5 dominated the headlines this week, but it wasn’t the only major release. Here are the other AI models launched in its shadow—each worth your attention.

gpt-oss—OpenAI’s first open models since 2019

After years of waiting, OpenAI has finally released new open models—the first since GPT-2 in 2019. (The company did release Whisper, an open speech recognition model, in 2022, but no open GPT models until now.)

Growing pressure—from those questioning the “open” in OpenAI’s name and from competition by Meta and Chinese labs—pushed the company to commit to an open release. This week, OpenAI delivered with gpt-oss.

gpt-oss is an open reasoning model that comes in two variants:

gpt-oss-20b: a 20B-parameter model that fits into 16GB of memory, aimed at on-device use, local inference, and rapid iteration without costly infrastructure

gpt-oss-120b: the more powerful version, with 120B parameters, which requires 80GB of memory

Both models offer a 128k token context window and use a sparse Mixture-of-Experts architecture that activates only a fraction of parameters per token—4.4% for the 120B and 17.2% for the 20B—offering high efficiency and speed without compromising much on quality.
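To see where those percentages come from, here is a minimal sketch of the arithmetic. The parameter counts are not stated in this newsletter. They are assumptions based on publicly reported approximate figures (about 5.1B active out of 117B total for the larger model, and about 3.6B active out of 20.9B total for the smaller one), so treat the result as a rough check rather than an official number.

```python
# Approximate parameter counts in billions.
# ASSUMPTION: these figures are not from the newsletter; they are
# publicly reported approximations used only for illustration.
MODELS = {
    "gpt-oss-120b": {"total_b": 117.0, "active_b": 5.1},
    "gpt-oss-20b": {"total_b": 20.9, "active_b": 3.6},
}


def active_fraction(total_b: float, active_b: float) -> float:
    """Share of parameters used per token in a sparse Mixture-of-Experts model."""
    return active_b / total_b


for name, params in MODELS.items():
    share = active_fraction(params["total_b"], params["active_b"])
    print(f"{name}: {share:.1%} of parameters active per token")
```

Under these assumed counts, the shares round to the 4.4% and 17.2% quoted above. The smaller model activates a larger share of its weights, but far fewer parameters in absolute terms.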

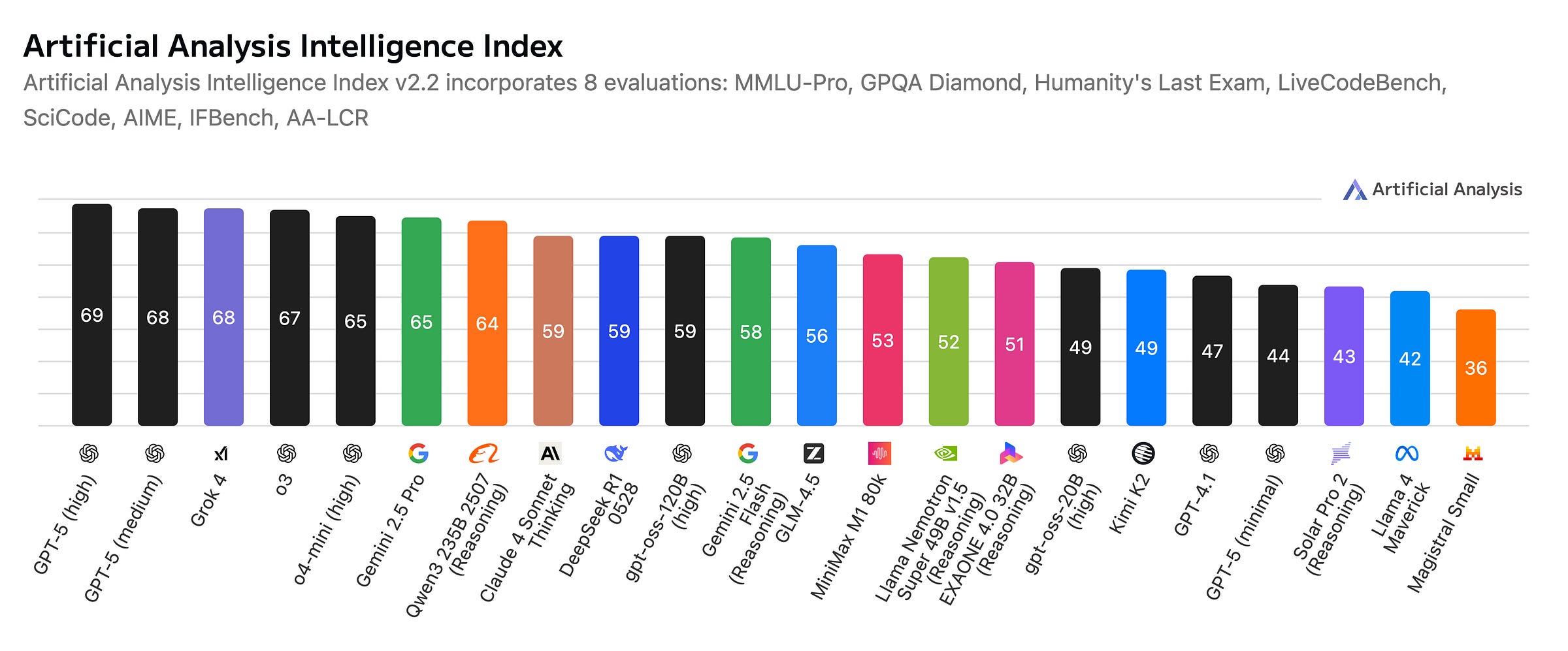

Independent testing from Artificial Analysis ranks gpt-oss-120b as the most capable American open-weights model, trailing DeepSeek R1 and Qwen3 235B in intelligence but surpassing them in efficiency. OpenAI’s own benchmarks show gpt-oss-120b performing at near-parity with o4-mini on core reasoning tasks, while the 20B variant matches o3-mini on common benchmarks. Keep in mind, though, that OpenAI compares gpt-oss only with its own models and does not report competitors’ results.

In addition to efficiency, OpenAI has emphasised safety. The models were trained on a heavily filtered dataset focused on STEM, coding, and general knowledge, with significant safety filtering to reduce harmful content.

Both gpt-oss-120b and gpt-oss-20b are available for download from Hugging Face and on a wide range of other platforms such as Azure, AWS, Ollama, llama.cpp, and more. You can also try out both models here without the need to install them locally.

From a cost perspective, the models are highly competitive. API providers offer median pricing around $0.15/$0.69 per million input/output tokens for the 120B and $0.08/$0.35 for the 20B—roughly ten times cheaper than OpenAI’s proprietary reasoning models, according to Artificial Analysis. This could make gpt-oss particularly attractive for researchers, startups, and enterprises looking to deploy capable reasoning models without massive infrastructure costs.
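To put those prices in context, here is a small sketch that estimates the bill for a given workload using the median prices quoted above. The workload size (10 million input tokens and 2 million output tokens) and the function name are hypothetical, chosen only for illustration. Actual prices vary by provider.

```python
# Median API prices in USD per million tokens (input, output),
# as quoted in the paragraph above.
PRICES_PER_MILLION = {
    "gpt-oss-120b": (0.15, 0.69),
    "gpt-oss-20b": (0.08, 0.35),
}


def estimate_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the estimated cost in USD for one workload on one model."""
    input_price, output_price = PRICES_PER_MILLION[model]
    return (input_tokens * input_price + output_tokens * output_price) / 1_000_000


# Hypothetical workload: 10M input tokens and 2M output tokens.
for model in PRICES_PER_MILLION:
    cost = estimate_cost(model, 10_000_000, 2_000_000)
    print(f"{model}: ${cost:.2f}")
```

For this hypothetical workload, the estimate comes to about $2.88 on the 120B model and $1.50 on the 20B model.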

OpenAI also introduced OpenAI Harmony, a new open-source format for prompt templates designed for complex, multi-role conversations and advanced tool usage. Harmony uses structured roles, channels, and dedicated token IDs to make agentic workflows more reliable and easier to integrate.

On paper, gpt-oss looks strong—scoring well on benchmarks, just behind the top Chinese models, and leading among Western open models. But its real-world performance remains unknown, and it may take weeks or months for the AI community to judge. One analysis raises an interesting point: gpt-oss may be optimised for benchmarks in ways that don’t fully translate to practical applications. It also suggests the model could be trained on synthetic or highly curated datasets—much like Microsoft’s Phi models—to ensure safety and protect OpenAI from being associated with people misusing gpt-oss.

OpenAI has not confirmed whether it will release updated versions, though it has launched a $500k Red Teaming Challenge to uncover flaws in gpt-oss-20b, which could feed into updates or safety refinements.

DeepMind Genie 3—a state-of-the-art world model

Beyond Gemini, DeepMind has been advancing research in many areas of AI, including world models—AI systems that simulate the real world, capable of generating realistic scenarios and planning actions without direct interaction.

Genie 3 is the latest addition to DeepMind’s family of world models. It is a general-purpose system that generates interactive worlds from simple text prompts, running in real time at 720p and 24 FPS. DeepMind says it understands physics well enough to replicate natural phenomena like water and lighting, and can simulate a wide range of settings—from natural ecosystems to historical locations and fictional realms.

This is also the first DeepMind world model to allow real-time interaction. Genie 3 remembers changes made to a scene and preserves environmental coherence for several minutes, with visual memory extending as far back as one minute. Additionally, users can now change the virtual world through promptable world events, modifying the environment mid-interaction—for example, changing the weather or introducing new objects—opening the door to “what-if” scenarios for both creative exploration and AI training.

Despite its impressive capabilities, DeepMind acknowledges the model’s limitations. Challenges include the limited range of actions an agent can take, the inability to simulate multiple independent agents in a shared world, and the lack of perfect geographic accuracy for real locations. Continuous interaction is currently capped at a few minutes rather than extended, hours-long sessions.

For now, Genie 3 is being released as a limited research preview for selected academics and creators, part of DeepMind’s effort to gather feedback and ensure responsible development. The company sees it as a key step towards richer, open-ended environments that could help train both AI systems and humans—moving closer to the long-term goal of achieving AGI.

Genie 3 represents a step forward in creating interactive virtual worlds. Potential applications include entertainment—where world models could generate entire games on demand—as well as training robots, autonomous systems, and professionals in realistic simulated environments.

Claude Opus 4.1—a small but welcome upgrade

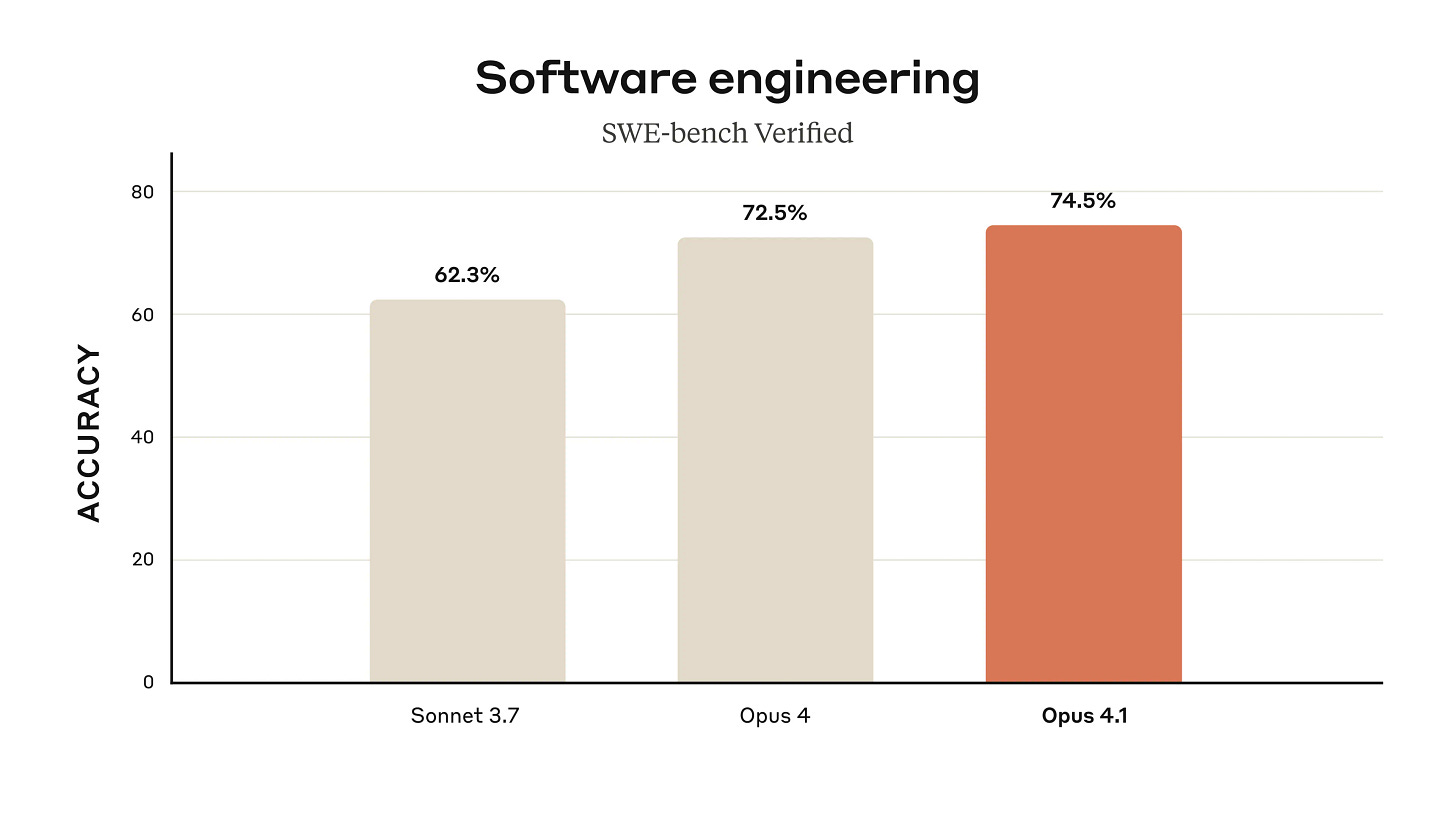

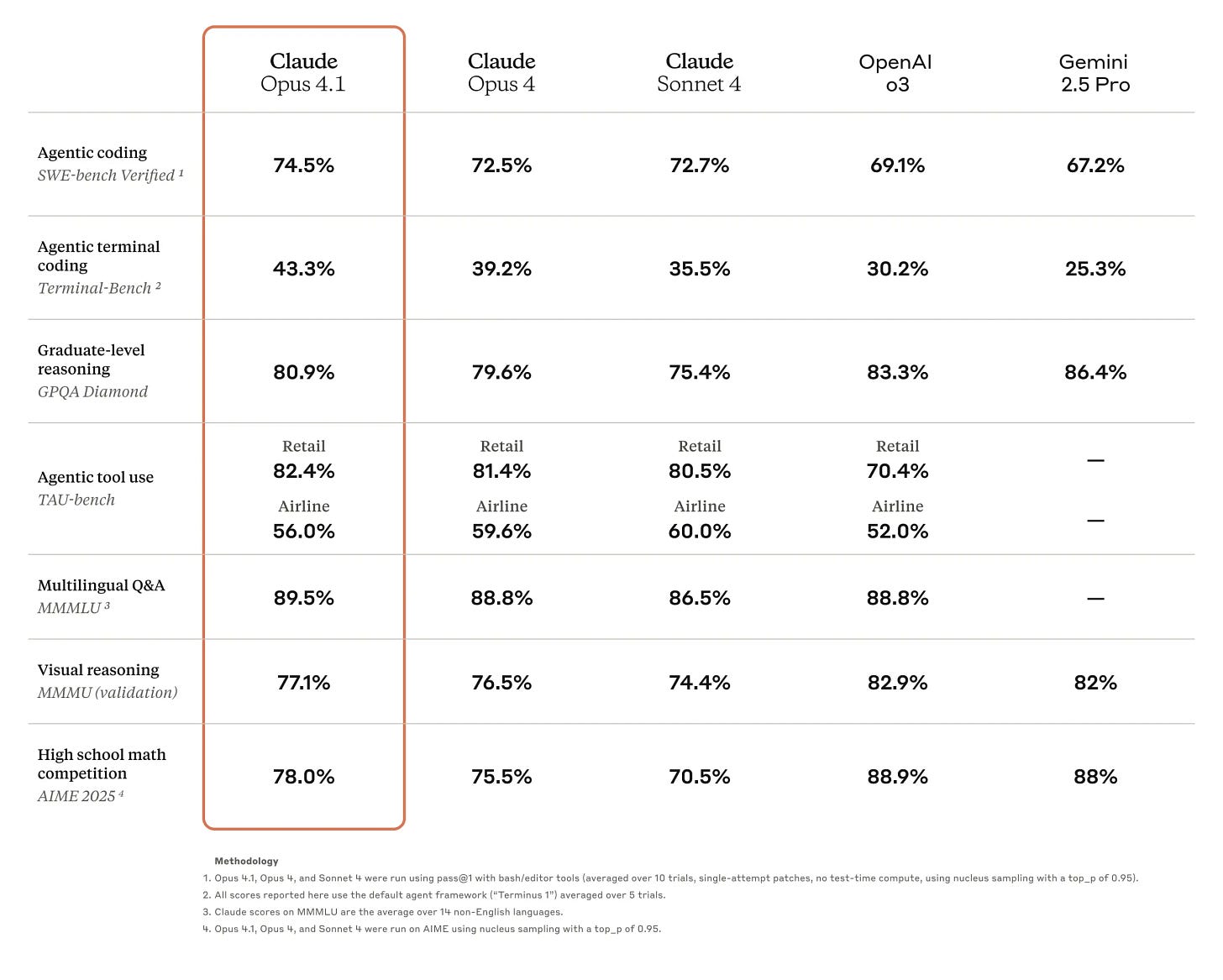

Anthropic has released Claude Opus 4.1, an updated version of the flagship model in its Claude family. According to benchmark results provided by Anthropic, the new model offers a slight improvement over its predecessor: an incremental rather than generational change, befitting the 4.1 name.

Like its predecessor, Claude Opus 4.1 offers a 200K token context window and is available to paid Claude users, in Claude Code, and via API through Amazon Bedrock and Google Cloud’s Vertex AI, with unchanged pricing.

Additionally, Anthropic hinted in the announcement post that a more significant update could arrive in the coming weeks. Could this be Claude 5?

Qwen-Image—a leading Chinese image model

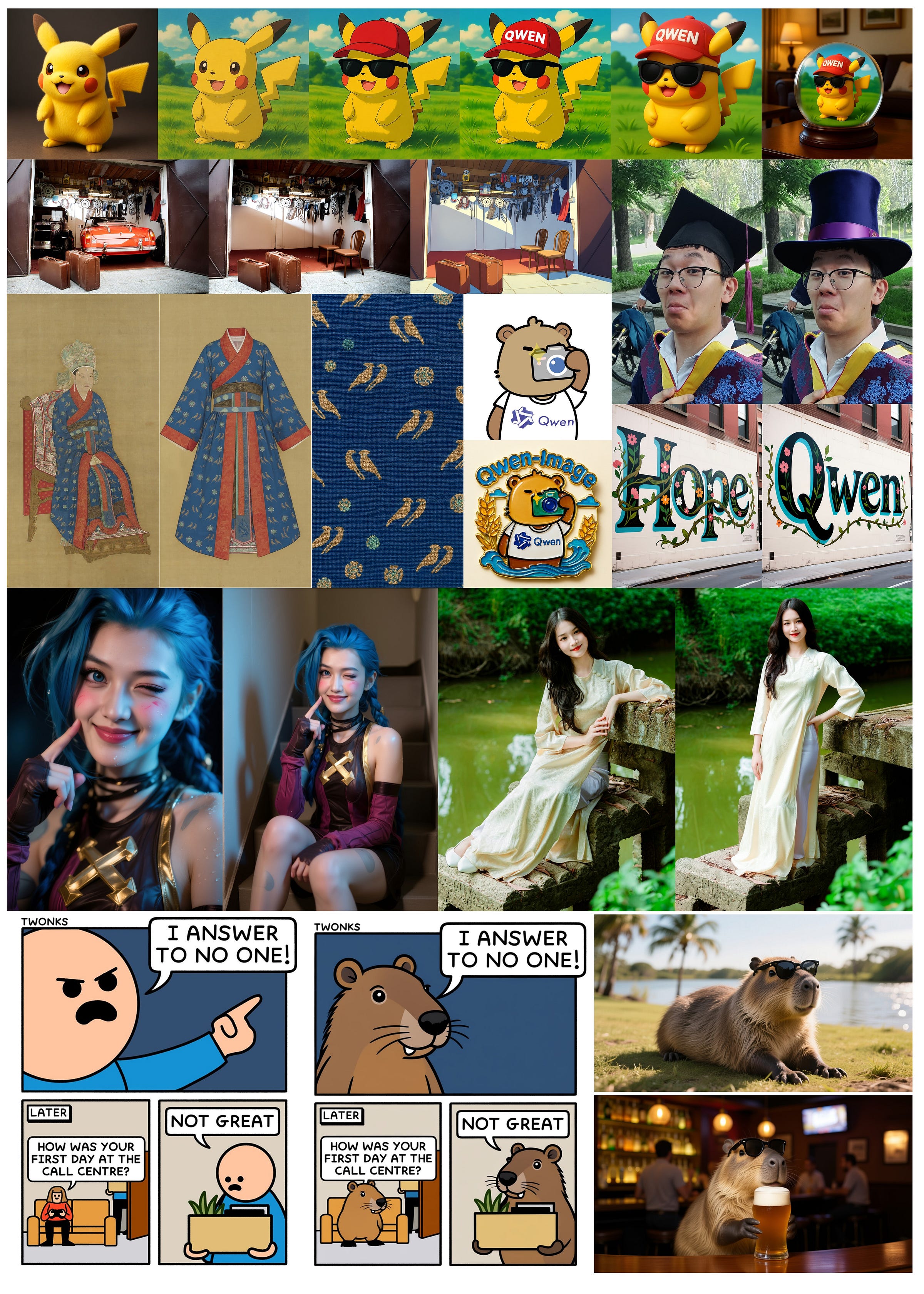

Following the release of Qwen3 and Qwen3-Coder, Alibaba introduced Qwen-Image, a text-to-image model and the latest addition to the Qwen family of open models.

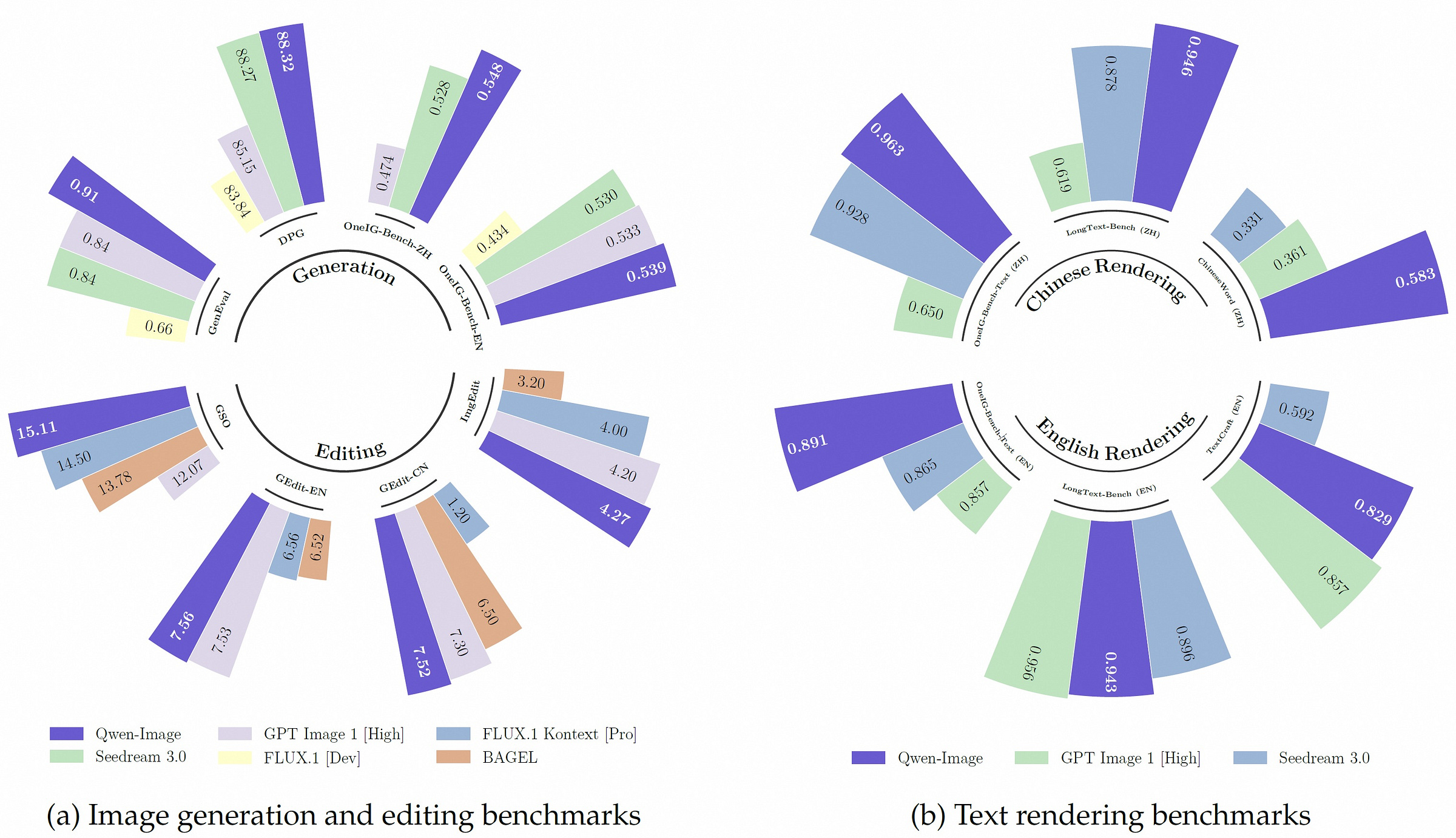

Benchmarks provided by Alibaba show Qwen-Image scoring highly across the board, matching or, in many cases, exceeding top open and closed image generation models.

The release notes and technical report put extra emphasis on Qwen-Image’s standout text rendering abilities in both Chinese and English, producing accurate multi-line text and full paragraphs. The model also excels in image editing, supporting style transfer, object insertion/removal, text editing, detail enhancement, and human pose manipulation.

Beyond that, Qwen-Image handles a wide range of artistic and photorealistic styles, supports image understanding tasks like object detection and depth estimation, and includes a super-resolution mode.

Qwen-Image is released under the Apache 2.0 licence and available for download on Hugging Face and ModelScope.

FLUX.1 Krea—an opinionated image model

Last but not least, I’d like to highlight FLUX.1 Krea—an open image model with an interesting approach to solving the issue of generating images with the “AI look”.

The team behind FLUX.1 Krea set out to create AI-generated images that feel authentic, avoiding the over-polished, overly bright, waxy-skin aesthetic that has become a hallmark of Midjourney and many other current image models. They argue that this “AI look” is often a by-product of industry practices that focus heavily on technical correctness (accurate anatomy, prompt adherence, or object counts) while sidelining the subtler, subjective qualities of style and atmosphere.

To counter this, they partnered with Black Forest Labs and built on flux-dev-raw, a large, pre-trained diffusion transformer with broad visual knowledge and no ingrained AI aesthetic.

The post-training process had two stages:

Supervised Fine-Tuning (SFT) on a hand-curated dataset reflecting the desired aesthetic.

Reinforcement Learning from Human Feedback (RLHF) to refine results further.

The team found that fewer than one million high-quality, carefully chosen examples outperformed much larger but less targeted datasets. They also avoided a “global preference” approach—blending multiple aesthetic tastes—which they argued produces bland, averaged-out images.

The result is a model that trades some technical precision for a more natural, grounded feel that doesn’t have the “AI look.” This is most visible in portraiture, where lighting, skin texture, and composition feel real rather than perfect. Even in architectural and street scenes, FLUX.1 Krea leans towards a candid, photographic style over a hyper-rendered look.

FLUX.1 Krea is an interesting model that demonstrates the potential of opinionated models in generative AI. In this sense, FLUX.1 Krea isn’t just an alternative model—it’s a statement that the future of AI art may belong to tools that lean into particular styles, allowing creators to work with models that share their style and sensibilities instead of flattening everything into the same “AI look.”

You can try out FLUX.1 Krea for yourself here. The model is also available on Hugging Face and GitHub.

If you enjoy this post, please click the ❤️ button or share it.

Do you like my work? Consider becoming a paying subscriber to support it

For those who prefer to make a one-off donation, you can 'buy me a coffee' via Ko-fi. Every coffee bought is a generous support towards the work put into this newsletter.

Your support, in any form, is deeply appreciated and goes a long way in keeping this newsletter alive and thriving.

🦾 More than a human

Longevity Firms Push Montana to Become Hub for Biohacking, Experimental Treatments

Montana, long known for attracting tourists with its fly fishing and hiking, is now drawing interest from biotech firms thanks to an expanded “Right to Try” law that lets any patient, not just those with terminal illnesses, access experimental drugs, therapies and devices. Supporters, including Robert F. Kennedy Jr., say the law empowers patients and could turn the state into a medical tourism hub, while critics warn it prioritises providers over patient safety and risks exposing people to ineffective or harmful therapies.

He worked with artificial limbs for decades. Then a lorry ripped off his right arm. What happened when the expert became the patient?

This is the story of Jim Ashworth-Beaumont, a former Royal Marine and prosthetics specialist who lost his right arm in a catastrophic cycling accident in 2020 and found himself in need of a prosthetic arm. After years of using uncomfortable socket-based prosthetics, he privately funded surgery to anchor a titanium implant directly into his bone, allowing him to attach high-tech myoelectric arms with greater comfort, control and mobility. Ashworth-Beaumont’s story highlights both the life-changing potential of the technology and the inequalities in access to it.

The science behind regrowing missing teeth

Soon, instead of getting dental implants, we might simply grow new teeth. A groundbreaking Japanese drug that could enable people to regrow missing or damaged teeth has entered clinical trials, with hopes for public availability by 2030. The treatment works by blocking the USAG-1 protein, which limits tooth growth, and has already shown success in mice. Initial trials will focus on young children with the rare hereditary condition anodontia, while other research teams worldwide are exploring alternative regeneration methods, including lab-grown teeth and stem cell-based enamel production.

🧠 Artificial Intelligence

OpenAI, Google and Anthropic Win US Approval for Civilian AI Contracts

The US General Services Administration (GSA) is adding OpenAI, Google, and Anthropic to its list of approved AI vendors for civilian federal agencies. This move will accelerate AI adoption by enabling agencies to procure tools such as ChatGPT, Gemini, and Claude through the Multiple Award Schedule, avoiding lengthy contract negotiations. The GSA emphasises that it is not “picking winners” but aims to offer a diverse range of AI tools for varied use cases, with additional companies expected to join later (the first three are simply further along in the procurement process).

OpenAI in Talks for Share Sale at $500 Billion Valuation

According to Bloomberg, OpenAI is in early discussions for a secondary stock sale that would allow current and former employees to sell shares at a valuation of about $500 billion. The sale could be worth billions of dollars and would represent a sharp increase from the company’s previous $300 billion valuation in a $40 billion financing round led by SoftBank. Existing investors, including Thrive Capital, have expressed interest in purchasing employee shares. The move is seen as a way to reward and retain staff, particularly as OpenAI has recently lost several research team members to Meta, which has been offering nine-figure pay packages to recruit talent for its “superintelligence” AI team.

Google Jules is now available for everyone

Jules, Google’s AI coding assistant powered by Gemini 2.5 Pro, is officially out of beta and publicly available. According to the announcement, during the beta period, Jules helped thousands of developers tackle tens of thousands of tasks, resulting in more than 140,000 publicly shared code improvements. Jules is available as part of the Google AI Pro and Ultra plans, with Google also offering a free introductory plan for anyone who wants to try it out.

OpenAI open weight models now available on AWS

Amazon announced that AWS will offer OpenAI models via Amazon Bedrock and Amazon SageMaker AI for the first time. However, OpenAI’s flagship models like GPT-5 are not coming to AWS. Instead, AWS will provide OpenAI’s latest open-weight models: gpt-oss-120b and gpt-oss-20b.

Kaggle Game Arena

There are many benchmarks designed to measure the intelligence of AI models, and Kaggle has added a new one called Game Arena. The idea is to pit leading models from the likes of Google, OpenAI and Anthropic against each other in various games. In the first contest, Gemini 2.5 Pro and Flash, o3 and o4-mini, DeepSeek R1, Kimi K2, Claude Opus 4, and Grok 4 competed to determine which model is best at chess. o3 won the overall tournament, with Grok 4 taking second place and Gemini 2.5 Pro coming third (full results are available here). If you are interested in how the games unfolded, GothamChess has three videos analysing how bad these models are at playing chess.

Tesla Disbands Dojo Supercomputer Team, Unwinding Key AI Effort

Tesla is disbanding its Dojo team, ending its in-house supercomputer project for driverless-vehicle technology, Bloomberg reports. Dojo chief Peter Bannon is leaving the company, while about 20 former team members have joined start-up DensityAI, founded by ex-Tesla executives. The remaining staff are being reassigned within Tesla, which will now rely more on partners such as Nvidia and AMD for computing and Samsung for chip manufacturing.

The uproar over Vogue’s AI-generated ad isn’t just about fashion

Vogue recently sparked controversy by publishing a Guess ad featuring an AI-generated model, igniting debate over the role of AI in high fashion. While AI models offer brands a fast, cost-effective, and flexible way to create product imagery, their use threatens the livelihoods of human models and raises concerns about “robot cultural appropriation,” where diversity is simulated rather than authentically represented. Although AI modelling remains experimental for many brands, Vogue’s decision could accelerate its mainstream acceptance.

World’s largest-scale brain-like computer with 2 billion neurons mimics monkey’s mind

Chinese researchers have unveiled Darwin Monkey, the world’s first neuromorphic brain-like computer built on dedicated neuromorphic chips, capable of mimicking a macaque monkey’s brain. The system uses 960 chips to support over 2 billion spiking neurons and more than 100 billion synapses. Consuming about 2,000 watts, Darwin Monkey can run the DeepSeek brain-like large model for logical reasoning, content generation, and mathematical problem-solving, as well as simulate animal brains of various sizes, from C. elegans to zebrafish, mice, and macaques.

Claude Fans Threw a Funeral for Anthropic’s Retired AI Model

On 21 July, around 200 people gathered in a warehouse in San Francisco for a funeral—not for a human, but for a retired AI model, Claude 3 Sonnet. The event featured mannequins representing various Claude models, eulogies delivered by attendees, and personal stories of how the model had influenced life choices (including dropping out of college to move to San Francisco). The gathering showcased the unusually emotional and devoted community surrounding Anthropic’s models—a fandom that, depending on your perspective, is either fascinating or a little unhinged.

AI is eating the Internet

This article explores how the Internet’s “Faustian bargain” (ads in exchange for free content) is crumbling in the age of AI, and how the Internet is transforming into a more fragmented, pay-gated and AI-driven landscape. However, there is some hope, as the article also envisions the emergence of a “cosy Internet,” in which users retreat to private, trusted spaces (newsletters, private forums) with verifiable human-made content to avoid bot-generated noise.

▶️ I built a private AI mini-cluster with Framework Desktop (20:01)

In this video, Jeff Geerling builds a mini AI cluster from four freshly released Framework Desktop computers and shows that connecting more machines to increase AI computing power is not as easy as it seems.

▶️ We Keep Falling For This... (10:31)

There is a new crop of command-line AI apps, such as Claude Code or OpenAI Codex CLI, that bring AI models into a terminal to make AI-assisted coding easier. However, these new apps also introduce fresh vulnerabilities—such as the bug described in this video, which tricks the Gemini CLI app into executing potentially malicious commands.

🤖 Robotics

▶️ Unitree A2 Stellar Hunter (2:06)

Unitree has unveiled the A2, its latest robot dog. Compared with its predecessor, the B2, the A2 is lighter and features improved perception capabilities, with two lidars (one at the front and one at the rear) and an upgraded camera. In the promotional video, the A2 performs impressive acrobatics, climbs rugged terrain with ease, carries a 37 kg load for over three hours, and more. Unitree has not disclosed the price of the new robot.

▶️ Investigating the Tesla Robotaxi (17:13)

In this video, Marques Brownlee shares his experiences riding in both a Tesla Robotaxi and a Waymo, from hailing the cars to being driven to his destination. Marques found both services to be good (although Waymo felt more polished), albeit with some hiccups and things a human driver would not do.

Amazon’s Zoox Taxi Is Clear To Operate Without A Steering Wheel Or Pedals

Zoox has received an exemption from the US National Highway Traffic Safety Administration to operate 64 steering wheel-less electric pods for demonstration purposes, following the closure of a 2022 probe into safety compliance. The approval excludes certain retired 2023 models, applies only to vehicles already under investigation, and does not allow commercial service.

These drones drop burning balls in the forest to control wildfires

Drone Amplified is transforming wildfire prevention with its IGNIS drones, which carry out controlled burns to remove hazardous dry vegetation before wildfires can spread. This method is cheaper and less risky than helicopter operations, allowing firefighters to “fight fire with fire” in a controlled manner within pre-programmed zones, guided by thermal cameras and operated safely from the ground. Beyond wildfire mitigation, the technology is also being adapted for environmental and safety purposes, including mosquito control in Hawaii and managed avalanches in Alaska.

Swarm robotics could spell the end of the assembly line

This article explores how manufacturing is poised for a major transformation thanks to swarm robotics, a system where autonomous robots use AI to self-program, coordinate in real time, and build large structures with minimal human oversight. The technology promises faster, cheaper, and more precise production, overcoming the inflexibility, high costs, and space demands of assembly lines that have dominated since the early 20th century.

🧬 Biotechnology

Synthetic E. coli with compressed DNA outsmarts viruses in radical genetic leap

Scientists at the MRC Laboratory of Molecular Biology in Cambridge, UK, created a synthetic E. coli strain named Syn57 with only 57 codons instead of the natural 64, making it the most compressed genetic code ever built. By removing redundant codons, the team freed space to add new biochemical functions, opening the door to future organisms that can create unnatural materials, virus-resistant biofactories, and advanced polymers.

💡 Tangents

Apple increases US commitment to $600 billion, announces American Manufacturing Program

Apple has announced an additional $100 billion investment in the United States, bringing its total planned US spending to $600 billion over the next four years. Central to this push is the new American Manufacturing Program, aimed at expanding domestic production and creating an end-to-end US silicon supply chain. Working with partners including Corning, TSMC, Texas Instruments, Samsung, and GlobalFoundries, Apple will produce key components, such as advanced chips, in the United States. The program also includes plans to create 20,000 new jobs, mainly focused on R&D, silicon engineering, software development, and AI and machine learning.

Thanks for reading.

Humanity Redefined sheds light on the bleeding edge of technology and how advancements in AI, robotics, and biotech can usher in abundance, expand humanity's horizons, and redefine what it means to be human.

A big thank you to my paid subscribers, to my Patrons: whmr, Florian, dux, Eric, Preppikoma and Andrew, and to everyone who supports my work on Ko-Fi. Thank you for the support!

My DMs are open to all subscribers. Feel free to drop me a message, share feedback, or just say "hi!"