The New York Times sues OpenAI and Microsoft - Weekly News Roundup - Issue #447

Plus: New York Times sues OpenAI and Microsoft; OpenAI is in talks to raise new funds at valuation of $100B+; a robot beats humans in labyrinth marble game; and more!

Welcome to Weekly News Roundup Issue #447. I expected a quiet holiday season, but then The New York Times filed a strong copyright infringement lawsuit against OpenAI and Microsoft, which is the main story of this week.

In other news, OpenAI is reportedly in talks to raise new funds at a valuation exceeding $100 billion, and a robot has outperformed humans in a labyrinth marble game. In the biotech sector, researchers have discovered that bacteria can store memories and transmit them to subsequent generations.

This is also the last issue of the Weekly News Roundup in 2023. This year the newsletter went through a lot of changes, including moving to Substack, rebranding, and introducing articles. The newsletter grew massively in 2023 and is on track to grow even more in 2024. I have several articles lined up for the next year exploring what is possible at the bleeding edge of technology and how these advancements can affect how we live, so stay tuned for that!

I want to give a big shoutout and say thank you to Michael from

whose advice helped me bring the newsletter to the place it is today. I also want to express my gratitude to you, the reader, for your support and for motivating me to continually refine my craft and deliver top-quality, informative content.

Lastly, I’d like to share my New Year’s Resolution for 2024. Happy New Year!

The New York Times has filed a lawsuit against OpenAI and Microsoft for copyright infringement, claiming that OpenAI’s large language models GPT-3.5 and GPT-4 were trained using the newspaper's content without authorization, potentially causing billions in damages.

The lawsuit, filed in Manhattan, alleges that OpenAI is eroding the revenue streams the Times needs to compensate its staff and is undermining the newspaper’s journalistic investments. "By providing Times content without The Times’s permission or authorization, Defendants’ tools undermine and damage The Times’s relationship with its readers and deprive The Times of subscription, licensing, advertising, and affiliate revenue," the suit states. The lawsuit further alleges that OpenAI's software can circumvent the Times' paywall and attribute false information to the newspaper, damaging not only its revenue streams but also its reputation as a credible news source. As an example of the latter, the lawsuit cites a GPT model that falsely claimed The New York Times had published an article linking orange juice to Non-Hodgkin’s Lymphoma, which the newspaper denies ever publishing.

The lawsuit demands significant damages (measured in billions of dollars) and the destruction of AI models using Times content. While Microsoft remains silent, OpenAI expressed disappointment, citing constructive ongoing talks with the Times.

The full text of the lawsuit The New York Times filed against OpenAI and Microsoft is available here.

This is not the only lawsuit OpenAI faces. I have covered some of these lawsuits here, here, and here. There is even an entire website tracking copyright infringement lawsuits filed against OpenAI. These lawsuits are part of a broader trend of artists and writers suing developers of generative AI models for copyright infringement. Some of these cases have been resolved, often not in favour of the artists or writers. In November, a judge dismissed most of Sarah Silverman's lawsuit against Meta, which accused the company of using copyrighted books to train its AI model without authorization. In a separate case, a judge narrowed a lawsuit filed by visual artists against Stability AI (the company that developed the technology powering Midjourney), Midjourney, and DeviantArt. The lawsuit accused these companies of misusing artists' copyrighted work in their AI systems. While the claims against Midjourney and DeviantArt were dismissed, the judge permitted artist Sarah Andersen to continue her key claim against Stability AI, leading her to file a new lawsuit with seven other artists at the end of November.

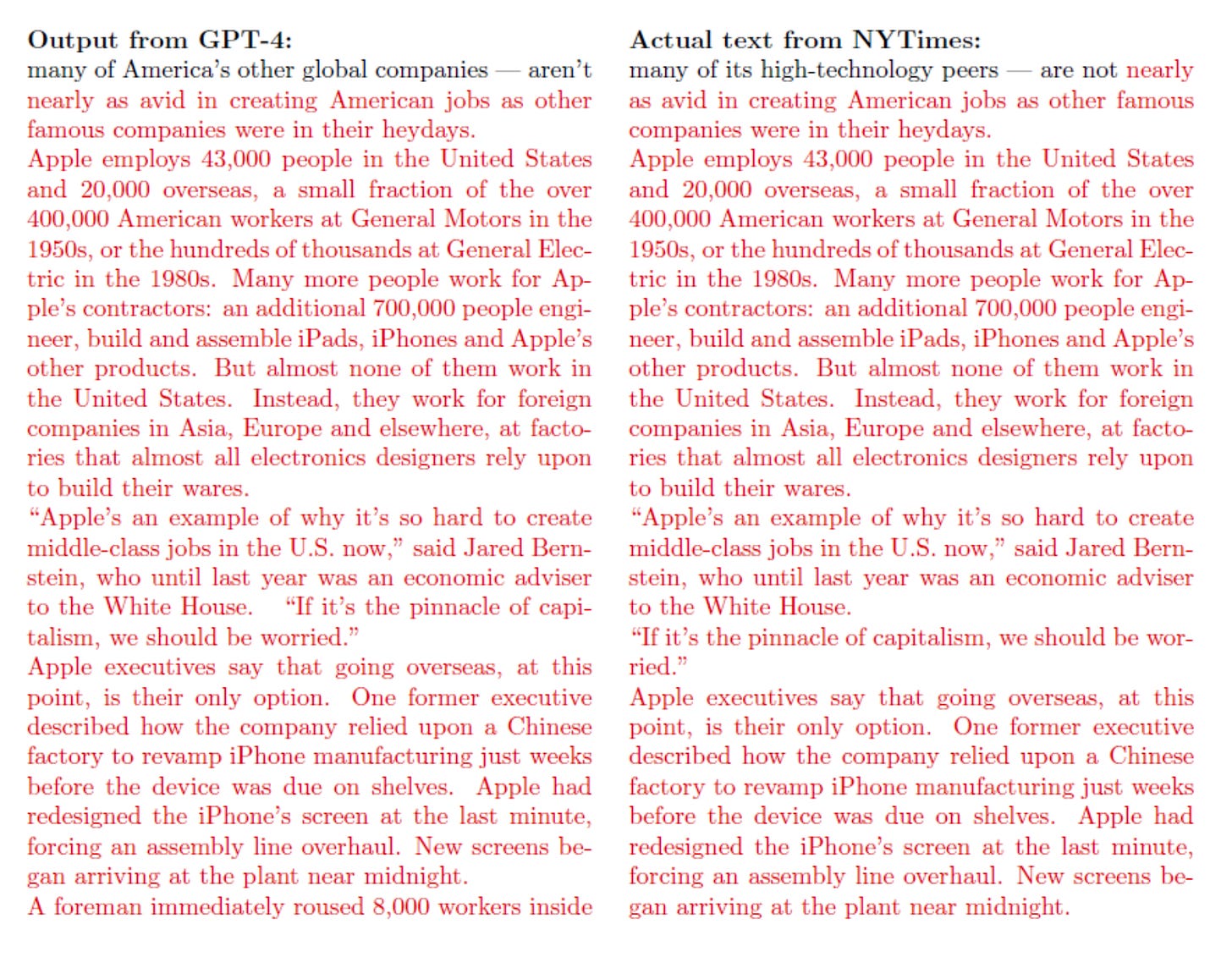

The previous lawsuits were unsuccessful because plaintiffs failed to prove that the generative AI models were directly copying their work. However, The New York Times lawsuit stands out as it provides multiple instances where ChatGPT allegedly copied content from the Times and recited large portions verbatim. This evidence and other such examples could potentially strengthen The New York Times' case.

Earlier this year, OpenAI took steps to improve its relationships with newspapers and copyright holders. The company now offers publishers an option to exclude their content from access by GPTBot, OpenAI’s web crawler. The company also partnered with several news agencies to use their content. In July, OpenAI announced a partnership with the Associated Press under which the Associated Press licenses part of its news story archive to OpenAI. This deal, which focuses on exploring generative AI in news reporting, also gives the Associated Press access to OpenAI's technology and expertise. Just two weeks ago, OpenAI revealed a partnership with Axel Springer that allows ChatGPT to quote from its publications, including content behind paywalls.

The lawsuit filed by The New York Times could be the most significant legal challenge generative AI has faced to date. The Times presents a strong case with evidence suggesting that OpenAI used its content without permission, which could have a massive impact on the entire AI industry.

If you enjoy this post, please click the ❤️ button or share it.

I warmly welcome all new subscribers to the newsletter this week. I’m happy to have you here and I hope you’ll enjoy my work.

The best way to support the Humanity Redefined newsletter is by becoming a paid subscriber.

If you enjoy and find value in my writing, please hit the like button and share your thoughts in the comments. Additionally, please consider sharing this newsletter with others who might also find it valuable.

For those who prefer to make a one-off donation, you can 'buy me a coffee' via Ko-fi. Every coffee bought is a generous support towards the work put into this newsletter.

Your support, in any form, is deeply appreciated and goes a long way in keeping this newsletter alive and thriving.

🦾 More than a human

Portable, non-invasive, mind-reading AI turns thoughts into text

Researchers at the University of Technology Sydney have developed a portable, non-invasive system that converts silent thoughts into text. This device could allow people unable to speak due to conditions like stroke or paralysis to communicate with the world again.

🧠 Artificial Intelligence

OpenAI Is in Talks to Raise New Funding at Valuation of $100 Billion or More

OpenAI is in early discussions to raise a fresh round of funding at a valuation at or above $100 billion, Bloomberg reports, citing people with knowledge of the matter. The deal would cement the ChatGPT maker as one of the world’s most valuable startups and make the company the second-most valuable US startup behind SpaceX.

How Not to Be Stupid About AI, With Yann LeCun

While two of the three godfathers of deep learning, Geoffrey Hinton and Yoshua Bengio, have recently joined the voices warning against AI, the third, Yann LeCun, now the chief AI scientist at Meta, remains a staunch advocate for the technology. He calls for embracing open-source AI, declares that it “should not be under the control of a select few corporate entities”, and keeps “discussing” these topics with AI fearmongers on Twitter/X. In his interview with Wired, LeCun explains why he pushed to open-source the Llama models, shares his views on OpenAI, AGI, and the impending AI regulations, and ponders whether AI can create music that truly moves humans.

▶️ Why Brain-like Computers Are Hard (17:43)

For years, computer scientists and engineers have been attempting to replicate the human brain. Our brains are low-power, resilient, and massively parallel computers that can quickly learn new skills, all attributes that are highly desirable in artificial intelligence. Significant progress has been made in mimicking brain functionality in software, with artificial neural networks running on general-purpose CPUs and GPUs. However, some researchers are exploring a different approach: replicating the brain in silicon. In this video, Asianometry explains how neuromorphic computing works, its recent advancements, and the challenges facing researchers in this field.


🤖 Robotics

After conquering chess, Go, and other games that can be easily recreated in the virtual world, AI and robots are now advancing to master physical games. CyberRunner, a robotics system developed by researchers from ETH Zurich, has learned to complete a labyrinth marble game faster than any human. Interestingly, the researchers noticed that the robot had learned to cheat in the game, which led them to explicitly forbid any illegal moves. CyberRunner is an open-source project, and its code and hardware will be released soon.

Remembering robotics companies we lost in 2023

Creating a successful robotics company is hard, and not everyone makes it. In this post, The Robot Report lists seven robotics companies that closed their doors in 2023 for reasons ranging from ill-conceived ideas to poor execution, tough economic circumstances, or the inability to raise funding.

🧬 Biotechnology

Gene editing had a banner year in 2023

As MIT Technology Review writes, 2023 was a good year for genetic engineering. The first CRISPR-based therapy, Casgevy, received approval in the UK and US for treating sickle-cell disease. At the same time, new gene-editing tools are being explored and tested. Among the companies working on them is Verve Therapeutics, which is pioneering base editing, a more precise technique that is currently being tested in a small clinical trial. While not related to gene editing, it's also noteworthy that the research behind mRNA vaccines won this year’s Nobel Prize, and AI-generated drugs have entered phase II clinical trials.

Bacteria Store Memories and Pass Them on for Generations

Researchers at The University of Texas at Austin found that E. coli bacteria use iron levels as a way to store information about different behaviours that can then be activated in response to certain stimuli and even passed on to the next generations. The discovery has potential applications for preventing and combatting bacterial infections and addressing antibiotic-resistant bacteria.

Thanks for reading.

Humanity Redefined sheds light on the bleeding edge of technology and how advancements in AI, robotics, and biotech can usher in abundance, expand humanity's horizons, and redefine what it means to be human.

A big thank you to my paid subscribers, to my Patrons: whmr, Florian, dux, Eric, Preppikoma and Andrew, and to everyone who supports my work on Ko-fi. Thank you for the support!