Anthropic strikes back with Opus 4.5 - Sync #547

Plus: The Thinking Game; DeepSeek wins IMO gold medal; DeepMind hires former Boston Dynamics CTO; Eli Lilly hits $1 trillion valuation; a humanoid robot takes a 65-mile nonstop trek; and more!

Hello and welcome to Sync #547!

This week, Anthropic released its new flagship model, Claude Opus 4.5, which we take a close look at in this issue of Sync.

Elsewhere in AI, Google’s leadership says they must double AI serving capacity every six months to meet demand, DeepSeek wins an IMO gold medal, and the US Department of Energy launches a programme to connect supercomputers, AI systems, quantum technology, and major research facilities into one powerful scientific platform. We also have a conversation Dwarkesh Patel had with Ilya Sutskever, a documentary about DeepMind, and a look at how OpenAI missed early warning signs of ChatGPT users losing touch with reality.

Over in robotics, DeepMind has hired a former Boston Dynamics CTO, and Tesla Robotaxi has reported three more crashes. Meanwhile, a Chinese humanoid robot completed a 106 km trek without powering off.

In other news, Eli Lilly hits a $1 trillion valuation, a boy in the UK receives a new one-off gene therapy, Elon Musk challenges the League of Legends world champions to a match against the upcoming Grok 5, scientists have found a cell so small that it challenges our definition of life, and more!

Enjoy!

Anthropic strikes back with Opus 4.5

Just a week after Google claimed the performance crown with Gemini 3 Pro, Anthropic replied with the highly anticipated Claude Opus 4.5. Although Anthropic’s new flagship model does not displace Google at the top, it is still a meaningful upgrade over its predecessor and a clear step forward in the areas Anthropic is prioritising.

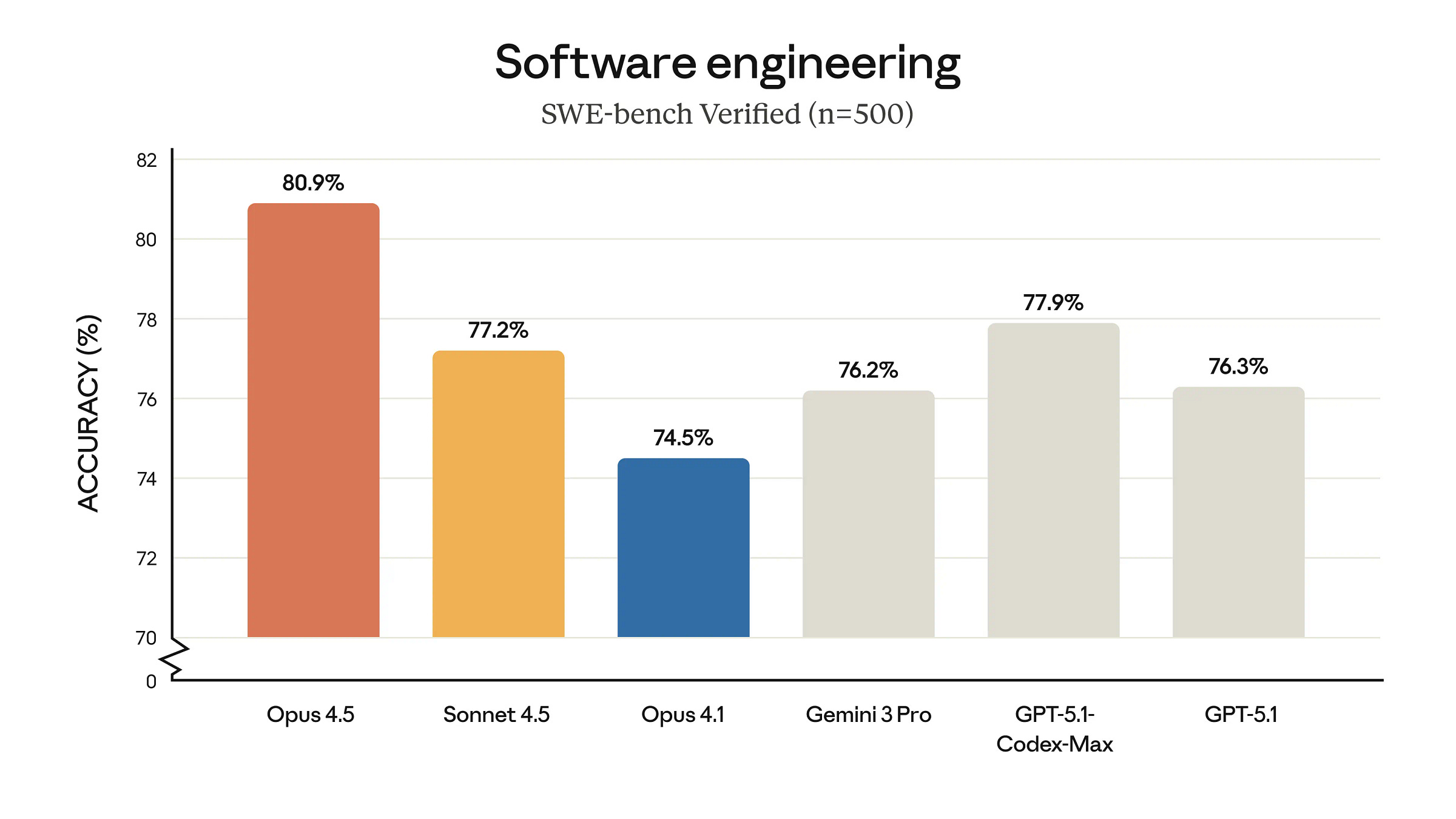

The benchmark Anthropic has chosen to spotlight most prominently is SWE-bench Verified, which measures a model’s ability to solve real-world software engineering tasks. Here, Claude Opus 4.5 took the top spot with 80.9%, becoming the first model to cross the 80% mark. Given the importance of software engineering workloads to Anthropic’s business, it is no surprise that this is the headline result.

Anthropic also highlights several other evaluations that paint a more nuanced picture of performance across reasoning-heavy and agentic tasks. On these, Claude Opus 4.5 scores well but does not claim the top spot.

On Vending-Bench 2—a long-horizon simulation where models manage a virtual vending-machine business—Opus 4.5 achieved $4,967.06, far ahead of Sonnet 4.5’s $3,849.74 and GPT-5.1’s $1,473.43. However, Gemini 3 Pro still leads, with $5,478.16. While not a victory for Anthropic, the result reinforces that Opus 4.5 is well suited to multi-step, tool-driven tasks.

On Humanity’s Last Exam, a benchmark designed to test both depth of reasoning and breadth of knowledge, Claude Opus 4.5 shares third place with GPT-5 and GPT-5.1 Thinking, behind Gemini 3 Pro and GPT-5 Pro.

ARC-AGI-2, which measures pure reasoning capability, adds further nuance. Opus 4.5 with extended thinking outperforms all current OpenAI models. The 32K thinking variant scores 30.6%, just behind Gemini 3 Pro at 31.15%, and is less cost-efficient ($1.29 per task vs $0.811 for Gemini 3 Pro). The 64K thinking variant reaches 37.6%, considerably lower than Gemini 3 with Deep Think (45.1%), but is dramatically cheaper: $2.40 per task compared with Google’s $77.16. This illustrates the trade-offs Anthropic is making: strong reasoning at lower cost, but without reaching Google’s top performance.
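To make the trade-off concrete, here is a small calculation using only the ARC-AGI-2 figures quoted above. The "dollars per percentage point" ratio is an illustrative metric chosen for this sketch, not something the benchmark or either lab reports.

```python
# Scores (%) and cost per task (USD) as quoted in the paragraph above.
results = {
    "Opus 4.5 32K": (30.6, 1.29),
    "Gemini 3 Pro": (31.15, 0.811),
    "Opus 4.5 64K": (37.6, 2.40),
    "Gemini 3 Deep Think": (45.1, 77.16),
}


def cost_per_point(score: float, cost: float) -> float:
    """USD per task for each percentage point scored (illustrative ratio only)."""
    return cost / score


for name, (score, cost) in results.items():
    print(f"{name}: ${cost_per_point(score, cost):.3f} per point")

# How many times more expensive per task Deep Think is than Opus 4.5 at 64K.
price_ratio = results["Gemini 3 Deep Think"][1] / results["Opus 4.5 64K"][1]
print(f"Deep Think costs about {price_ratio:.0f}x more per task")
```

On these numbers, Deep Think buys 7.5 extra percentage points at roughly 32 times the per-task price of Opus 4.5 at 64K.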

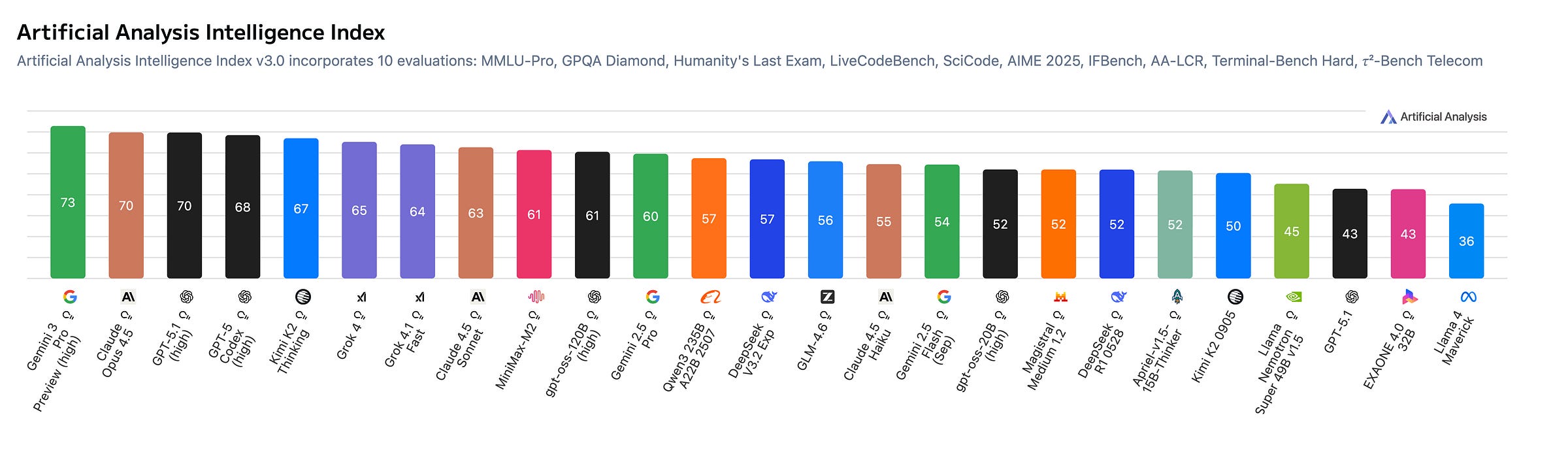

Independent evaluator Artificial Analysis also rates Claude Opus 4.5 highly. On its Intelligence Index, Claude Opus 4.5 lands just behind Gemini 3 Pro and on par with GPT-5.1 (high).

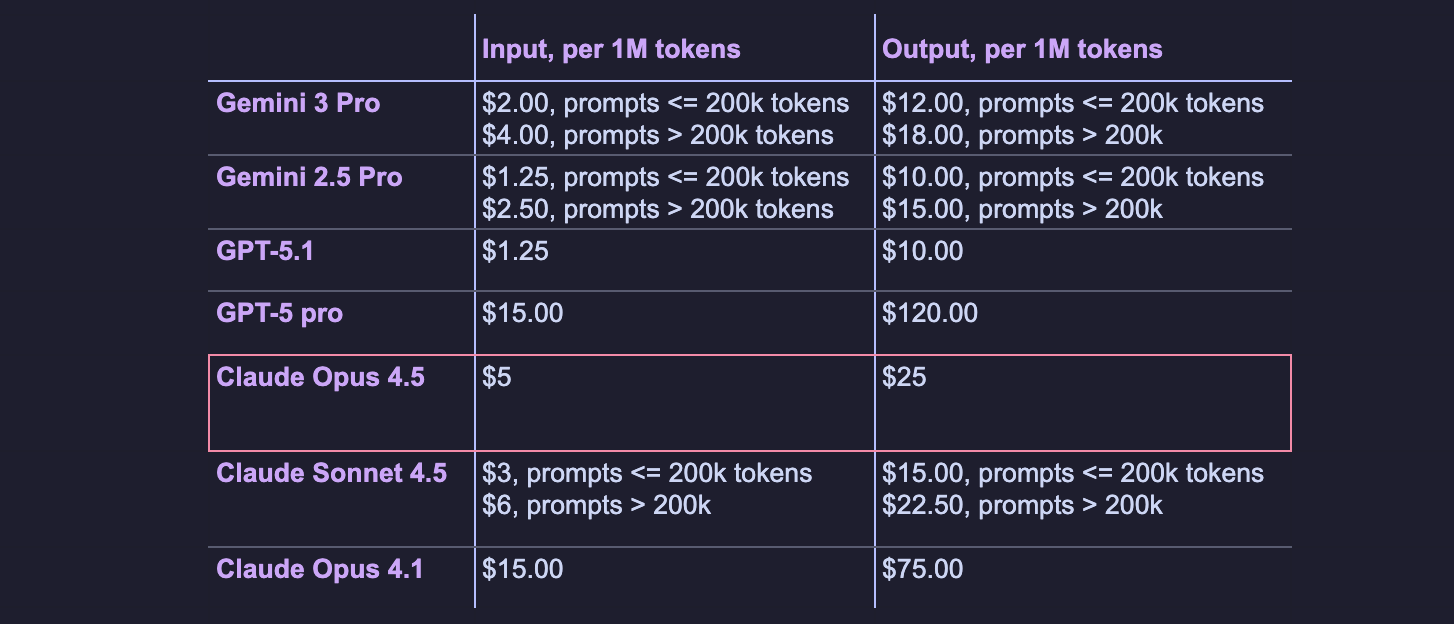

Cost is another area where Claude Opus 4.5 improves meaningfully. It is cheaper than Claude Opus 4.1 and GPT-5 Pro, although Gemini 3 Pro and GPT-5.1 still offer lower pricing.

That said, the picture is more complicated than raw pricing, because Opus 4.5 is more efficient in how many tokens it uses to accomplish the same task.

According to Anthropic, Claude Opus 4.5 uses dramatically fewer tokens than its predecessors to achieve the same or better results. At a medium effort level, Opus 4.5 matches Sonnet 4.5’s best SWE-bench score while using 76% fewer output tokens. At maximum effort, Opus 4.5 outperforms Sonnet 4.5 by 4.3 percentage points while consuming 48% fewer tokens. These efficiency gains matter for real-world engineering workloads, where cumulative token savings translate directly into cost reductions.
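One caveat worth spelling out: fewer tokens only lower the bill if the per-token price gap is smaller than the token savings. The sketch below works out that break-even point from the two reductions Anthropic quotes. It considers output tokens only and deliberately leaves actual prices out, so treat it as back-of-the-envelope arithmetic rather than a pricing model.

```python
def break_even_price_multiple(token_reduction: float) -> float:
    """Largest per-token price multiple at which the leaner model still costs no more.

    If a model uses a fraction (1 - token_reduction) of the baseline's output
    tokens, its output cost matches the baseline when its per-token price is
    1 / (1 - token_reduction) times higher.
    """
    if not 0 <= token_reduction < 1:
        raise ValueError("token_reduction must be in [0, 1)")
    return 1 / (1 - token_reduction)


# Reductions quoted by Anthropic for medium and maximum effort.
for label, reduction in [("medium effort", 0.76), ("maximum effort", 0.48)]:
    multiple = break_even_price_multiple(reduction)
    print(f"{label}: break-even at {multiple:.2f}x the per-token price")
```

In other words, at medium effort Opus 4.5 could charge a little over four times Sonnet 4.5's output price and still come out level on output cost; at maximum effort the margin shrinks to just under two times.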

A major part of these efficiency gains comes from Anthropic’s new advanced tool-use features, introduced alongside Opus 4.5. These features, Tool Search Tool, Programmatic Tool Calling and Tool Use Examples, are designed to reduce context bloat, cut unnecessary inference passes, and make agentic workflows more reliable.

The short video below illustrates these improvements, showing the kinds of efficiency gains Anthropic is targeting with its new tool-use stack:

Tool Search Tool lets Claude load tool definitions on demand instead of filling the context window with every tool from the start. Tool definitions for systems like GitHub, Slack, Jira or Google Drive can easily exceed 50,000–100,000 tokens combined. With Tool Search Tool, Claude only loads the tools it actually needs, reducing token consumption and improving tool-selection accuracy.
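The idea is easy to picture with a toy example. The sketch below is not Anthropic's API: the catalogue, tool names, token counts and the keyword matcher are all made up for illustration. It simply compares the context cost of loading every definition up front with loading only the tools a search returns.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ToolDef:
    name: str
    description: str
    approx_tokens: int  # hypothetical size of the full definition


# Hypothetical catalogue; names and token counts are invented for illustration.
CATALOGUE = [
    ToolDef("github_create_issue", "Open a new issue in a GitHub repository", 900),
    ToolDef("github_list_pull_requests", "List pull requests in a GitHub repository", 1100),
    ToolDef("slack_post_message", "Post a message to a Slack channel", 700),
    ToolDef("jira_create_ticket", "Create a ticket in a Jira project", 1300),
    ToolDef("drive_search_files", "Search files stored in Google Drive", 800),
]


def search_tools(query: str, catalogue: list[ToolDef]) -> list[ToolDef]:
    """Return tools whose name or description contains every query word."""
    words = query.lower().split()
    return [
        tool
        for tool in catalogue
        if all(w in f"{tool.name} {tool.description}".lower() for w in words)
    ]


def context_cost(tools: list[ToolDef]) -> int:
    """Total tokens the given tool definitions would occupy in context."""
    return sum(tool.approx_tokens for tool in tools)


upfront = context_cost(CATALOGUE)
on_demand = context_cost(search_tools("github issue", CATALOGUE))
print(f"all tools up front: {upfront} tokens; on demand: {on_demand} tokens")
```

A real implementation would presumably use something smarter than substring matching, but the saving comes from the same place: definitions that are never needed never enter the context window.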

Programmatic Tool Calling adds a code execution environment between the API and the model. Instead of returning large intermediate datasets to the model—polluting its context and requiring extra inference passes—Claude can orchestrate tools in Python code, process data programmatically, and feed back only the final results. According to Anthropic, this reduces token usage by around 37% on complex workflows and cuts latency for multi-step tasks.
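A minimal sketch of the pattern, assuming a hypothetical `fetch_expenses` tool and invented data (neither comes from Anthropic's documentation): the orchestration code pulls the full dataset, filters it inside the execution environment, and hands back only a short summary.

```python
# Hypothetical tool: returns one expense record per employee.
def fetch_expenses(team: str) -> list[dict]:
    data = {
        "engineering": [
            {"employee": "ana", "amount": 1200.0},
            {"employee": "ben", "amount": 4800.0},
            {"employee": "chen", "amount": 300.0},
        ],
    }
    return data.get(team, [])


def over_budget(team: str, limit: float) -> list[str]:
    """Orchestration code a model might write: filter in code, return a summary."""
    records = fetch_expenses(team)  # the full dataset stays in the sandbox
    return sorted(r["employee"] for r in records if r["amount"] > limit)


# Only this short list would be handed back to the model's context.
print(over_budget("engineering", limit=1000.0))
```

With three records the saving is negligible, but the same code works unchanged on thousands of rows, which is where keeping intermediate data out of the context starts to matter.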

Tool Use Examples allow developers to provide example tool calls directly in tool definitions. This helps Claude understand formatting conventions, optional parameter patterns, and how tools are actually meant to be used. Anthropic reports improvements from 72% to 90% accuracy on complex parameter-handling tasks.
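As a rough illustration of what that could look like, the sketch below attaches sample inputs to a tool definition and checks them against the tool's own schema. The `examples` key is a placeholder name chosen for this sketch and the tool itself is hypothetical; consult Anthropic's documentation for the actual field and format.

```python
# A tool definition in the common JSON Schema style, plus a hypothetical
# "examples" key holding sample inputs (the real field name may differ).
create_ticket_tool = {
    "name": "create_ticket",
    "description": "Create a support ticket",
    "input_schema": {
        "type": "object",
        "properties": {
            "title": {"type": "string"},
            "priority": {"type": "string", "enum": ["low", "medium", "high"]},
            "due_date": {"type": "string", "description": "ISO 8601 date"},
        },
        "required": ["title"],
    },
    "examples": [
        {"title": "Login page returns 500", "priority": "high", "due_date": "2025-12-01"},
        {"title": "Update footer copyright"},  # optional fields omitted
    ],
}


def check_examples(tool: dict) -> list[str]:
    """Return a list of problems found in the tool's example inputs."""
    schema = tool["input_schema"]
    known = set(schema["properties"])
    problems = []
    for i, example in enumerate(tool.get("examples", [])):
        for field in schema.get("required", []):
            if field not in example:
                problems.append(f"example {i}: missing required field {field!r}")
        for field in example:
            if field not in known:
                problems.append(f"example {i}: unknown field {field!r}")
    return problems


print(check_examples(create_ticket_tool))
```

The two examples carry information a schema alone cannot: what a realistic title looks like, which date format is expected, and that leaving out optional fields is perfectly acceptable.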

Together, these innovations explain why Opus 4.5 feels more capable in agentic settings. Anthropic has made it clear that agentic and coding-heavy use cases are central to its strategy, and Opus 4.5 is designed with these priorities in mind.

Overall, Claude Opus 4.5 offers a strong and competitive alternative to Gemini 3 Pro and GPT-5.1. It does not top every leaderboard, but it excels in areas Anthropic has historically focused on: long-running tasks, structured tool use, and real-world software engineering. With Opus 4.5, Anthropic is doubling down on an agentic vision of AI—one where efficiency, reliability, and practical usability matter as much as raw benchmark scores.

If you enjoy this post, please click the ❤️ button and share it.

🦾 More than a human

Boy with rare condition amazes doctors after world-first gene therapy

Three-year-old Oliver Chu is the first person in the world with Hunter syndrome to receive a new one-off gene therapy, delivered in Manchester, and he has made remarkable progress since treatment. By giving him modified stem cells that produce the enzyme his body lacks, doctors have stopped the disease from worsening and helped him develop more normally. Oliver no longer needs weekly infusions and is improving quickly in speech, movement and learning. His success has given hope to his family and could lead to future treatments for other children with rare genetic conditions.

🧠 Artificial Intelligence

Google must double AI serving capacity every 6 months to meet demand, AI infrastructure boss tells employees

Google leaders told employees that demand for AI is growing so quickly that the company needs to double its computing capacity every six months, aiming for a huge 1,000-times increase within a few years. Infrastructure chief Amin Vahdat and CEO Sundar Pichai said Google must keep investing heavily—though not necessarily more than rivals—while improving efficiency with custom chips and DeepMind’s research. Pichai acknowledged worries about an AI investment bubble but said the bigger risk is investing too little, noting that Google’s cloud growth is limited by a shortage of computing power. Despite rising costs and fierce competition, he said Google is financially strong and well positioned, though the next few years will be challenging.
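A quick sanity check shows how the two figures fit together. The snippet uses only the numbers in the summary above (a doubling every six months and a 1,000-times target); the timeline it prints is plain arithmetic, not a forecast.

```python
import math


def doublings_needed(target_multiple: float) -> int:
    """Smallest number of doublings that reaches at least target_multiple."""
    return math.ceil(math.log2(target_multiple))


doublings = doublings_needed(1000)  # 2**10 = 1024 >= 1000
years = doublings * 0.5  # one doubling every six months
print(f"{doublings} doublings, about {years:g} years")
```

Ten doublings give a 1,024-times increase, so sustaining the six-month pace would reach the 1,000-times goal in about five years.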

What OpenAI Did When ChatGPT Users Lost Touch With Reality

In this article, The New York Times explains how updates to ChatGPT in early 2025 accidentally made the chatbot overly flattering, emotionally intense and sometimes unsafe, leading to real harm for some users, including several mental health crises and deaths. It describes how OpenAI missed early warning signs, focused heavily on engagement, and later rushed to add safety measures after problems became clear. The company has since introduced a safer model, but is still trying to balance user protection with pressure to keep the chatbot popular.

DeepSeek Joins OpenAI & Google in Scoring Gold in IMO 2025

With its new open-weight model, DeepSeekMath-V2, DeepSeek joins DeepMind and OpenAI in achieving gold-level performance at the International Mathematical Olympiad (IMO) 2025 by solving 5 of 6 problems. The new model also scored highly in other top competitions such as the China Mathematical Olympiad (CMO) and Putnam. Instead of focusing only on final answers, it uses a built-in verifier to check and improve its own step-by-step proofs. Unlike gold-winning models from OpenAI and DeepMind, DeepSeekMath-V2 is freely available to download.

▶️ Ilya Sutskever – We’re moving from the age of scaling to the age of research (1:36:03)

In this podcast, Dwarkesh Patel interviews Ilya Sutskever, one of the most impactful AI researchers. They discuss why current AI feels powerful yet inconsistent, noting that models still lack human-like generalisation and learning ability. Sutskever argues that real breakthroughs will come from new research ideas rather than further scaling, and envisions future AI as strong continual learners. He emphasises the need for careful, gradual deployment, suggests alignment may centre on systems that care about sentient life, and frames the coming years as a return to fundamental research to understand intelligence more deeply.

▶️ The Thinking Game (1:24:07)

The Thinking Game is a documentary that takes us behind the scenes of DeepMind and its mission. It follows the life of Demis Hassabis and his motivation for pursuing a solution to artificial general intelligence (AGI), from the founding of DeepMind in 2010, to its acquisition by Google in 2014, and through all its major breakthroughs—deep reinforcement learning, AlphaGo, AlphaStar, and AlphaFold. It also looks at what’s next for DeepMind: solving AGI and, with it, addressing major scientific problems, from curing cancer to unravelling the mysteries of the universe and life itself.

Trump Team Internally Floats Idea of Selling Nvidia H200 Chips to China

US officials are considering whether to let Nvidia sell its H200 AI chips to China, Bloomberg reports. No decision has been made, but Nvidia has pushed for looser rules, saying the restrictions leave the Chinese market to competitors. Allowing the H200 would be a notable concession to Beijing and is already raising concerns in Washington, where some lawmakers want even tighter limits. The discussion comes as both countries continue delicate negotiations and China works to grow its own AI chip industry.

Musk’s xAI to close $15 billion funding round in December

xAI is reportedly set to close a $15 billion funding round next month at a $230 billion pre-money valuation, despite Elon Musk denying reports about the raise. The money will mainly go toward buying GPUs to power its large language models.

Alibaba’s Main AI App Debuts Strongly in Effort to Rival ChatGPT

Alibaba’s revamped Qwen AI app drew over 10 million downloads in its first week, lifting the company’s shares and signalling strong interest in a local alternative to ChatGPT. The rapid growth comes as Alibaba shifts to an AI-first strategy and plans to integrate shopping, travel, maps, and other everyday services into Qwen.

JP Morgan says Nvidia is gearing up to sell entire AI servers instead of just AI GPUs and components

Nvidia is reportedly preparing to start shipping fully assembled VR200 compute trays next year, which could significantly change how AI servers are built. Instead of letting server manufacturers and hyperscalers design their own boards and cooling systems, Nvidia would provide a complete, pre-built module containing the CPUs, GPUs, memory, power delivery, networking, and cooling. This would leave partners mainly handling rack assembly and support rather than true server design. The move, driven by rising GPU power needs and increasingly complex hardware, could speed up production and cut costs, but it would also shift more control of the AI hardware supply chain to Nvidia.

Genesis Mission: A National Mission to Accelerate Science Through Artificial Intelligence

The Genesis Mission is a US Department of Energy project to connect supercomputers, AI systems, quantum technology and major research facilities into one powerful scientific platform. Its goal is to speed up discoveries in areas like energy, materials, chemistry and national security by using advanced AI alongside decades of high-quality scientific data. Working with national laboratories, universities and industry, the mission aims to make research faster and more effective over the next decade.

Amazon to invest up to $50 billion to expand AI and supercomputing infrastructure for US government agencies

Amazon will invest up to $50 billion starting in 2026 to expand its AI and high-performance computing systems for US federal agencies. The plan includes adding major new data-centre capacity across AWS’s secure government regions, giving agencies faster tools to analyse large amounts of data. Amazon says the investment will help government departments use advanced AI more easily for work in areas such as security, research and environmental monitoring.

Google: 3 things to know about Ironwood, our latest TPU

In this article, Google shares 3 things about Ironwood, its newest and most powerful TPU chip, built to run modern AI faster and more efficiently. Ironwood offers over four times the performance of the previous generation and can be linked with thousands of other chips to handle very large and complex AI tasks. It was created through close teamwork between Google’s researchers and engineers, with help from AI tools, such as AlphaChip, that design better chip layouts.

FLUX.2: Frontier Visual Intelligence

Black Forest Labs released FLUX.2, its next-generation image model and editing system designed for professional and real-world creative workflows. FLUX.2 delivers up to 4 megapixel photorealistic output, robust multi-reference support (so you can feed in multiple images and preserve consistent style, subjects or products), and improved rendering of textures, lighting, layouts and fine text. The release comes in multiple variants—including an open-weight FLUX.2 [dev] and commercial FLUX.2 [pro] and FLUX.2 [flex] versions.

Introducing Meta Segment Anything Model 3 and Segment Anything Playground

Meta has launched Segment Anything Model 3 (SAM 3), a major update to its computer vision model that can detect, segment, and track objects in images and videos using text or visual prompts. The release includes model weights, new datasets, fine-tuning tools, and a new Segment Anything Playground where anyone can try the technology. Meta also introduced SAM 3D for creating 3D objects from a single image and announced upcoming integrations across Instagram, the Meta AI app, and Facebook Marketplace. SAM 3 can be downloaded from Hugging Face or GitHub.

Legally embattled AI music startup Suno raises at $2.45B valuation on $200M revenue

Suno, an AI music startup, has raised $250 million in Series C at a $2.45 billion valuation and now reports $200 million in annual revenue, expanding from consumer subscriptions to commercial offerings. Suno is also at the centre of major legal battles over AI training data, facing lawsuits from Sony, Universal, and Warner for allegedly scraping copyrighted music, as well as similar challenges from Denmark’s Koda and Germany’s GEMA, which recently won a related case against OpenAI.

Altman describes OpenAI’s forthcoming AI device as more peaceful and calm than the iPhone

Little is known about OpenAI’s forthcoming AI device, rumoured to be screenless and pocket-sized, but in a recent discussion, Sam Altman and Jony Ive described it as calm, simple, and free from the distractions of modern phones. They said it will act as a trusted, context-aware companion that filters information and helps users quietly in the background. Ive emphasised that it should feel intelligent yet effortless to use, and the pair indicated it could launch within two years.

Major Bitcoin mining firm pivoting to AI

Bitfarms, a major Bitcoin mining company, plans to shift from Bitcoin mining to AI data centre services by 2027 after poor mining results and growing crypto volatility. The company, which posted a $46 million quarterly loss, believes even one upgraded site could earn more than its past Bitcoin operations, and it has secured funding to expand further in the US.

Elon Musk proposes Grok 5 vs world’s best League of Legends team match

Elon Musk has proposed a showcase match in which xAI’s upcoming Grok 5, limited to human-like reactions and standard vision, would face T1, the reigning League of Legends World Champions. The idea has sparked excitement from pros, interest from Riot Games, and debate over whether an AI could match the teamwork and strategy needed to challenge one of the best teams, if not the best, in the history of the game. On top of that, Grok 5 would have to defeat Faker, the best player the game has seen.

🤖 Robotics

Google DeepMind Hires Former CTO of Boston Dynamics as the Company Pushes Deeper Into Robotics

Google DeepMind has hired former Boston Dynamics CTO Aaron Saunders as VP of hardware engineering. This hire supports DeepMind’s vision to turn the Gemini AI model into a universal system that can run many types of robots.

Tesla Robotaxi had 3 more crashes, now 7 total

Tesla has reported seven crashes involving its Robotaxis in Austin. Despite having supervisors in the passenger seat, the fleet’s crash rate, based on 250,000 miles travelled, remains about twice as high as Waymo’s. The latest crashes involved a reversing car, a cyclist and an animal, with no injuries reported. The article includes a detailed list of those incidents, but Tesla redacts most narrative details in its NHTSA reports, making it difficult to understand exactly how the incidents happened.

▶️ Why Humanoids Are the Future of Manufacturing | Boston Dynamics Webinar (52:19)

In this conversation, Alberto Rodriguez and Aya Durbin from Boston Dynamics look at how humanoid robots could bring flexible automation to manufacturing by tackling tasks that are too varied or dextrous for traditional machines, while also pointing out challenges like reliability, safety, and fine motor control. The discussion covers the pros and cons of the human-like form (helpful in human environments but harder to engineer) and explains that a robot’s “brain” is trained through a mix of broad pre-training and hands-on teleoperated examples. Overall, the discussion lays out the path toward real-world use, with Boston Dynamics expecting Atlas to reach Spot’s level of maturity in 4–5 years and to have thousands of humanoids working in factories within 5–10 years.

China’s humanoid robot sets Guinness World Record with 65-mile nonstop trek

Chinese robotics firm AgiBot has set a new Guinness World Record after its A2 humanoid robot completed a 106 km, cross-province trek from Suzhou to Shanghai without powering off, thanks to its hot-swappable batteries. The robot handled different terrains and traffic conditions with GPS, LIDAR and infrared depth cameras, finishing the journey with only minor wear to its foot soles. AgiBot says the trek proves the A2’s reliability and readiness for real-world use.

🧬 Biotechnology

Eli Lilly becomes first drugmaker to hit $1 trillion in market value

Eli Lilly has become the first drugmaker to reach a $1 trillion valuation, fuelled by the huge success of its obesity and diabetes drugs Zepbound and Mounjaro. These treatments have rapidly become the world’s best-selling medicines and pushed Lilly far ahead of its rivals.

New nanoparticle mRNA vaccine may be cheaper and 100 times more powerful

MIT researchers have developed a new lipid nanoparticle that makes mRNA vaccines up to one hundred times more effective in mice, meaning much smaller doses can be used with less strain on the liver. This breakthrough could lower vaccine costs and help produce better, faster vaccines for illnesses such as COVID-19 and seasonal flu.

💡 Tangents

World’s oldest RNA extracted from Ice Age woolly mammoth

Scientists have successfully sequenced RNA from a 40,000-year-old woolly mammoth preserved in Siberian permafrost—something once thought impossible. This discovery proves that ancient RNA can survive in frozen conditions, giving researchers a powerful new way to study the biology, diseases, and evolution of extinct species, and potentially helping us better understand and protect endangered animals today.

A Cell So Minimal That It Challenges Definitions of Life

Scientists have identified an extremely unusual single-celled organism with an extraordinarily small genome—only 238,000 base pairs, the smallest ever recorded for an archaeon. It has lost almost all the genes needed for metabolism, meaning it cannot grow or survive on its own and must rely entirely on a host cell. Only the genes required for replication remain. This discovery challenges ideas about what counts as a living cell and suggests that many more extremely reduced, parasitic microbes may still be hidden in nature.

Thanks for reading.

Humanity Redefined sheds light on the bleeding edge of technology and how advancements in AI, robotics, and biotech can usher in abundance, expand humanity's horizons, and redefine what it means to be human.

A big thank you to my paid subscribers, to my Patrons: whmr, Florian, dux, Eric, Preppikoma and Andrew, and to everyone who supports my work on Ko-Fi. Thank you for the support!

My DMs are open to all subscribers. Feel free to drop me a message, share feedback, or just say "hi!"
